Streaming Data

21/08/14 00:49 Filed in: Data pipeline

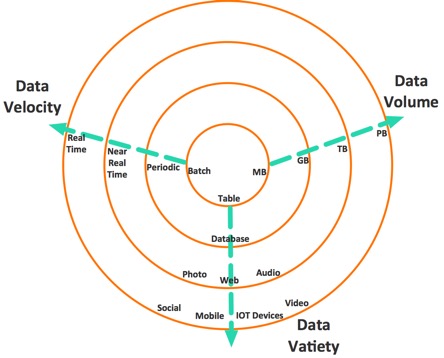

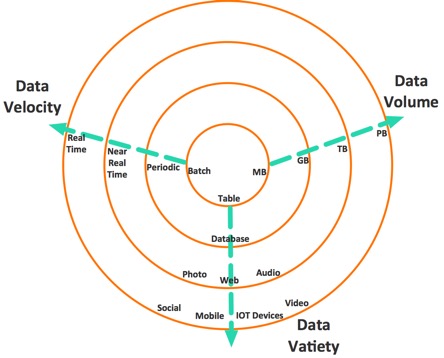

Big data is a collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional applications. These data sets contain not only structured data but also unstructured data. Big data has three characteristics:

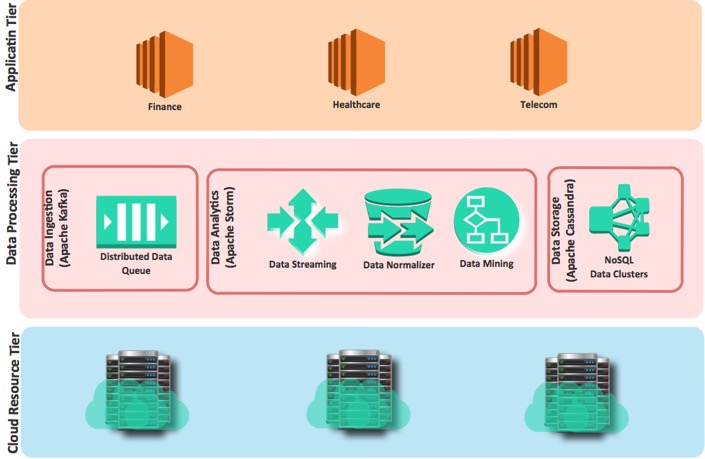

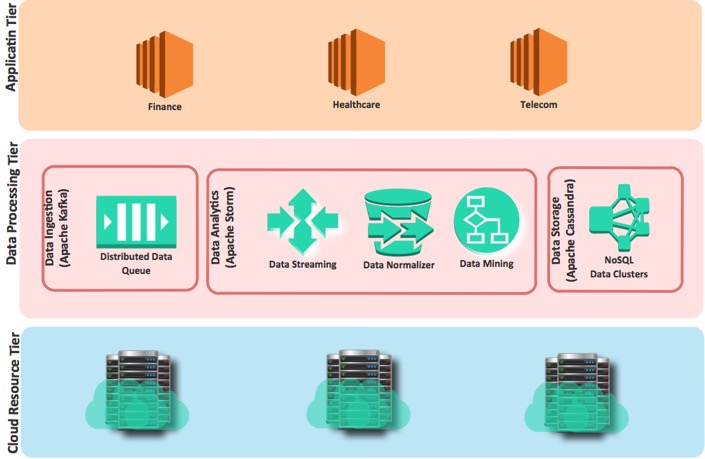

- Volume

- Velocity

- Variety

The diagram explains the characteristics.

Recently, more and more data is becoming readily available as streams. Each stream data item can be classified along the 5Ws data dimensions, listed here together with a sixth, “how”:

- What is the data? Video, image, text, number.

- Where did the data come from? Twitter, a smartphone, a hacker.

- When did the data occur? The timestamp of the data incidence.

- Who received the data? A friend, a bank account, a victim.

- Why did the data occur? Sharing photos, finding new friends, spreading a virus.

- How was the data transferred? By Internet, by email, by online transfer.
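The dimensions above can be sketched as a small record type. This is only an illustration: the `StreamItem` class, its field names, and the `count_by` helper are assumptions made for this example and do not come from any particular framework.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable


@dataclass(frozen=True)
class StreamItem:
    """One stream data item described along the dimensions listed above."""
    what: str       # kind of data, e.g. "image" or "text"
    where: str      # source of the data, e.g. "twitter"
    when: datetime  # timestamp of the data incidence
    who: str        # recipient of the data, e.g. "friend"
    why: str        # reason the data occurred, e.g. "sharing photos"
    how: str        # transfer channel, e.g. "internet" or "email"


def count_by(items: Iterable[StreamItem], dimension: str) -> Counter:
    """Count items per value of one dimension (hypothetical helper)."""
    return Counter(getattr(item, dimension) for item in items)


items = [
    StreamItem("image", "twitter", datetime(2014, 8, 21, 0, 49, tzinfo=timezone.utc),
               "friend", "sharing photos", "internet"),
    StreamItem("text", "smartphone", datetime(2014, 8, 21, 0, 50, tzinfo=timezone.utc),
               "friend", "finding new friends", "email"),
]
by_source = count_by(items, "where")
```

Classifying items this way makes it possible to group or filter a stream by any single dimension, for example by source or by recipient.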

Conventional data processing technologies can no longer process data of this volume within a tolerable elapsed time. In-memory databases have key problems of their own: larger data sets may not fit into memory, and moving all data sets to a centralized machine is too expensive. To process data as they arrive, the paradigm has shifted from the conventional “one-shot” data processing approach to elastic and virtualized data processing frameworks, based in cloud datacenters, that can mine continuous, high-volume, open-ended data streams.
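To make the contrast with “one-shot” processing concrete, here is a minimal sketch of handling values as they arrive. It assumes a simple numeric stream, and the `running_mean` function is a name invented for this example. Only a count and the current mean are kept, so memory use stays constant however long the stream runs.

```python
from typing import Iterable, Iterator


def running_mean(stream: Iterable[float]) -> Iterator[float]:
    """Yield the mean of all values seen so far, one result per arriving value."""
    count = 0
    mean = 0.0
    for value in stream:
        count += 1
        # Incremental update: no earlier values need to be stored.
        mean += (value - mean) / count
        yield mean


means = list(running_mean([2.0, 4.0, 6.0]))
```

A one-shot approach would first collect the entire data set and only then compute the mean, which is not possible for an open-ended stream.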

The framework contains three main components.

- Data Ingestion: Accepts data from multiple sources such as social networks, online services, etc.

- Data Analytics: Consists of many systems that read, analyze, clean, and normalize the data.

- Data Storage: Provides facilities to store and index data sets.
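The three components can be sketched as a toy, single-process pipeline. This is an illustration under simplifying assumptions, not a description of any real framework: the names `ingest`, `analyze`, `Store`, and `run_pipeline` are invented here, the sources are plain Python iterables read one after another, and the “index” is an in-memory dictionary.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List


def ingest(*sources: Iterable[str]) -> Iterator[str]:
    """Data ingestion: accept records from several sources, one after another."""
    for source in sources:
        yield from source


def analyze(records: Iterable[str]) -> Iterator[str]:
    """Data analytics: clean and normalize each record, dropping empty ones."""
    for record in records:
        cleaned = " ".join(record.split()).lower()
        if cleaned:
            yield cleaned


class Store:
    """Data storage: keep records and index them by word."""

    def __init__(self) -> None:
        self.records: List[str] = []
        self.index: Dict[str, List[int]] = defaultdict(list)

    def add(self, record: str) -> None:
        position = len(self.records)
        self.records.append(record)
        for word in set(record.split()):
            self.index[word].append(position)

    def search(self, word: str) -> List[str]:
        return [self.records[i] for i in self.index.get(word, [])]


def run_pipeline(*sources: Iterable[str]) -> Store:
    """Wire the three components together, handling one record at a time."""
    store = Store()
    for record in analyze(ingest(*sources)):
        store.add(record)
    return store


store = run_pipeline(["  Hello   World ", ""], ["hello stream"])
```

Because each stage is a generator, a record flows through ingestion, analytics, and storage as soon as it arrives, rather than waiting for the whole input to be collected.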

The following diagram explains these components.