Setup Saltstack

28/04/19 21:39 Filed in: Devops

In this article, I'll provide step-by-step instructions to setup Salt master and 1 minion in two VM instances.

Before start, I assume

- Two VM has been setup in CentOS 7 or above

- Non-root sudo user in the system

- Both server are able to communicate

The summary of two servers are

Master

- OS: CentOS 7

- Hostname: rm-dev01

- IP address: 192.168.4.136

Minion

- OS: CentOS 7

- Hostname: rm-dev02

- IP address: 192.168.4.137

#Step 1:

#Update with latest stable releases.

sudo yum update -y && sudo reboot

#After reboot complete sign in with sudo user.

#Step 2:

#Setup Saltmaster

sudo yum install salt-master

#Step 3:

#Setup master configuration settings

sudo vi /etc/salt/master

#Replace following line "#interface: 0.0.0.0" with

interface: 192.168.4.136

#Find "#hash_type: md5" and replace with

hash_type: sha256

#Save and quit

#Enable as service

sudo systemctl start salt-master.service

sudo systemctl enable salt-master.service

#Step 4:

#Modify firewall rules

#By default, the salt-master service will use ports 4505 and 4506 to communicate with minions. You need to allow traffic through the two ports on the master server. Find out to which zone the eth1 interface belongs:

sudo firewall-cmd --get-active-zones

#You will find out that the eth1 interface belongs to the "public" zone. Therefore, you need to allow traffic through the two ports in the "public" zone:

sudo firewall-cmd --permanent --zone=public --add-port=4505-4506/tcp

sudo firewall-cmd --reload

#Step 5:

#Setting up minion

sudo yum install salt-minion

#After the installation, modify the configuration file as below:

sudo vi /etc/salt/minion

#Find:

#master: salt

#Replace the line with:

master: 10.99.0.10

#Find: "#hash_type: sha256" replace the line with:

hash_type: sha256

#Save and quit:

#Start and enable the salt-minion service:

sudo systemctl start salt-minion.service

sudo systemctl enable salt-minion.service

#After starting up, the salt-minion service will send off a signal to find the SaltStack server.

#list keys

sudo salt-key -L

#you may see following result

Accepted Keys:

Denied Keys:

Unaccepted Keys:

rm-dev02

Rejected Keys:

# accept the key

sudo salt-key --accept=rm-dev02

# You should see following

The following keys are going to be accepted:

Unaccepted Keys:

rm-dev02

Proceed? [n/Y] y

Key for minion rm-dev02 accepted.

#type following

sudo salt-key -L

#this should show

Accepted Keys:

rm-dev02

Denied Keys:

Unaccepted Keys:

Rejected Keys:

Before start, I assume

- Two VM has been setup in CentOS 7 or above

- Non-root sudo user in the system

- Both server are able to communicate

The summary of two servers are

Master

- OS: CentOS 7

- Hostname: rm-dev01

- IP address: 192.168.4.136

Minion

- OS: CentOS 7

- Hostname: rm-dev02

- IP address: 192.168.4.137

#Step 1:

#Update with latest stable releases.

sudo yum update -y && sudo reboot

#After reboot complete sign in with sudo user.

#Step 2:

#Setup Saltmaster

sudo yum install salt-master

#Step 3:

#Setup master configuration settings

sudo vi /etc/salt/master

#Replace following line "#interface: 0.0.0.0" with

interface: 192.168.4.136

#Find "#hash_type: md5" and replace with

hash_type: sha256

#Save and quit

#Enable as service

sudo systemctl start salt-master.service

sudo systemctl enable salt-master.service

#Step 4:

#Modify firewall rules

#By default, the salt-master service will use ports 4505 and 4506 to communicate with minions. You need to allow traffic through the two ports on the master server. Find out to which zone the eth1 interface belongs:

sudo firewall-cmd --get-active-zones

#You will find out that the eth1 interface belongs to the "public" zone. Therefore, you need to allow traffic through the two ports in the "public" zone:

sudo firewall-cmd --permanent --zone=public --add-port=4505-4506/tcp

sudo firewall-cmd --reload

#Step 5:

#Setting up minion

sudo yum install salt-minion

#After the installation, modify the configuration file as below:

sudo vi /etc/salt/minion

#Find:

#master: salt

#Replace the line with:

master: 10.99.0.10

#Find: "#hash_type: sha256" replace the line with:

hash_type: sha256

#Save and quit:

#Start and enable the salt-minion service:

sudo systemctl start salt-minion.service

sudo systemctl enable salt-minion.service

#After starting up, the salt-minion service will send off a signal to find the SaltStack server.

#list keys

sudo salt-key -L

#you may see following result

Accepted Keys:

Denied Keys:

Unaccepted Keys:

rm-dev02

Rejected Keys:

# accept the key

sudo salt-key --accept=rm-dev02

# You should see following

The following keys are going to be accepted:

Unaccepted Keys:

rm-dev02

Proceed? [n/Y] y

Key for minion rm-dev02 accepted.

#type following

sudo salt-key -L

#this should show

Accepted Keys:

rm-dev02

Denied Keys:

Unaccepted Keys:

Rejected Keys:

Benefits of Golang

01/08/18 22:27 Filed in: Programming | Golang

Go is an open source programming language that makes it easy to build simple, reliable, efficient software and provides a robust development experience and avoids many issues that existing programming languages have. The strengths of Go are that it

Easy to learn

Go is so small and simple that the entire language and its underlying concepts can be studied in just a couple of days

Built-in concurrency

Go was written in the age of multi-core CPUs and has easy, high-level CSP-style concurrency built into the language compared the other languages Java, JavaScript, Python, Ruby, C, C++ have been designed in the 1980s-2000s when most CPUs had only one compute core.

Uses OO — the good parts

Go takes a fresh approach at object-orientation with those learnings in mind. It keeps the good parts like encapsulation and message passing. Go avoids inheritance because it is now considered harmful and provides first-class support for composition instead.

Modern standard library

Languages including Java, JavaScript, Python, Ruby were designed before the internet was the ubiquitous computing platform it is today. Hence, the standard libraries of these languages provide only relatively generic support for networking that isn’t optimized for the modern internet. Go was created a decade ago when the internet was already in full swing. Go’s standard library allows creating even sophisticated network services without third-party libraries.

Extremely fast compiler

Go is designed from the ground up to make compilation efficient and as a result its compiler is so fast that there are almost no compilation delays. This gives a Go developer instant feedback similar to scripting languages, with the added benefits of static type checking.

Easy cross compilation

Go supports compilation to many platforms like macOS, Linux, Windows, BSD, ARM, and more. Go can compile binaries for all these platforms out of the box. This makes deployment from a single machine easy.

Executes very fast

Go runs close to the speed of C. Unlike JITed languages (Java, JavaScript, Python, etc), Go binaries require no startup or warmup time because they ship as compiled and fully optimized native code

Small memory footprint & deployment size

Runtimes like the JVM, Python, or Node don’t just load your program code when running it. They also load large and highly complex pieces of infrastructure to compile and optimize your program each time you run it. This makes their startup time slower and causes them to use large amounts (hundreds of MB) of RAM. Go processes have less overhead because they are already fully compiled and optimized and just need to run

Easy to learn

Go is so small and simple that the entire language and its underlying concepts can be studied in just a couple of days

Built-in concurrency

Go was written in the age of multi-core CPUs and has easy, high-level CSP-style concurrency built into the language compared the other languages Java, JavaScript, Python, Ruby, C, C++ have been designed in the 1980s-2000s when most CPUs had only one compute core.

Uses OO — the good parts

Go takes a fresh approach at object-orientation with those learnings in mind. It keeps the good parts like encapsulation and message passing. Go avoids inheritance because it is now considered harmful and provides first-class support for composition instead.

Modern standard library

Languages including Java, JavaScript, Python, Ruby were designed before the internet was the ubiquitous computing platform it is today. Hence, the standard libraries of these languages provide only relatively generic support for networking that isn’t optimized for the modern internet. Go was created a decade ago when the internet was already in full swing. Go’s standard library allows creating even sophisticated network services without third-party libraries.

Extremely fast compiler

Go is designed from the ground up to make compilation efficient and as a result its compiler is so fast that there are almost no compilation delays. This gives a Go developer instant feedback similar to scripting languages, with the added benefits of static type checking.

Easy cross compilation

Go supports compilation to many platforms like macOS, Linux, Windows, BSD, ARM, and more. Go can compile binaries for all these platforms out of the box. This makes deployment from a single machine easy.

Executes very fast

Go runs close to the speed of C. Unlike JITed languages (Java, JavaScript, Python, etc), Go binaries require no startup or warmup time because they ship as compiled and fully optimized native code

Small memory footprint & deployment size

Runtimes like the JVM, Python, or Node don’t just load your program code when running it. They also load large and highly complex pieces of infrastructure to compile and optimize your program each time you run it. This makes their startup time slower and causes them to use large amounts (hundreds of MB) of RAM. Go processes have less overhead because they are already fully compiled and optimized and just need to run

Create private ethereum in Mac environment

21/04/18 09:07 Filed in: Blockchain

Using homebrew is easier to setup. To setup brew in your Mac try this https://brew.sh/

$brew update

$brew upgrade

$brew tap ethereum/ethereum

$brew install ethereum

For this setup I use ~/code/ethereum as my root directory for development setup. Create devethchain directory.

$mkdir devethchain

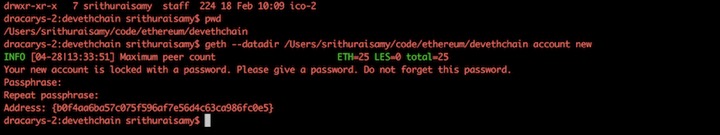

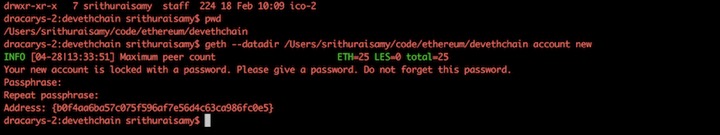

Create accounts

$geth --datadir ~/code/ethereum/devethchain account new

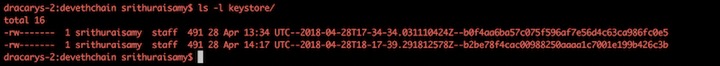

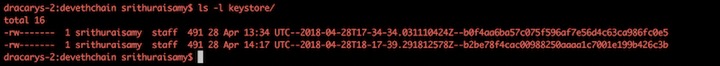

Create multiple accounts. After creating if you list files in keystore, you should see following files

$ls -l keystore

Get the account ID

In genesis file following values can be filled in

- chainId — chain’s identifier, is used in replay protection.

- homesteadBlock, eip155Block, eip158Block, byzantiumBlock — these relate to chain forking and versioning, for new blockchain leave them as 0 value.

This is to mine a block. Setting this value low (~10–10000) is helpful in a private blockchain as it lets you mine blocks quickly, which equals fast transactions, and plenty of ETH to test with. For comparison, the Ethereum mainnet Genesis file defines a difficulty of 17179869184.

This is the the total amount of gas that can be used in each block. With such a low mining difficulty, blocks will be moving pretty quick, but you should still set this value pretty high to avoid hitting the limit and slowing down your network.

Here you can allocate ETH to specific addresses you have created before. You will need to add the account to your private chain in order to use it, and to do that you need access to the keystore/utc file. The addresses provided are not real addresses, they are just examples. Here we allocate 100,000 and 120,000 ETH respectively.

$cd ~/code/ethereum/devethchain

$touch devgenesis.json

Add following into devgenesis.json file

{

"config": {

"chainId": 1966,

"homesteadBlock": 0,

"eip155Block": 0,

"eip158Block": 0,

"byzantiumBlock": 0

},

"difficulty": "400",

"gasLimit": "2000000",

"alloc": {

"b0f4aa6ba57c075f596af7e56d4c63ca986fc0e5": {

"balance": "100000000000000000000000"

},

"b2be78f4cac00988250aaaa1c7001e199b426c3b": {

"balance": "120000000000000000000000"

}

}

}

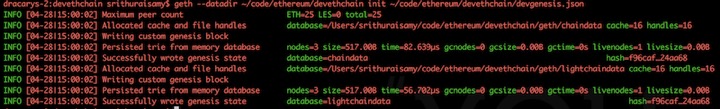

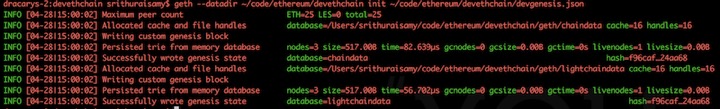

geth --datadir ~/code/ethereum/devethchain init ~/code/ethereum/devethchain/devgenesis.json

Start private chain

To keep private network you have to provide Networkid value. You can use any number here, I have used 196609, but other peers joining your network must use the same one.

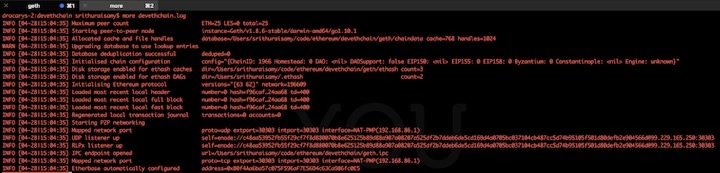

geth --datadir ~/code/ethereum/devethchain --networkid 196609 console 2>> devethchain.log

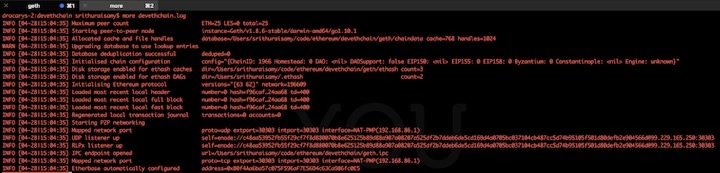

Output should be like above. This is the geth JavaScript console. Any command with the symbol > should be typed here.

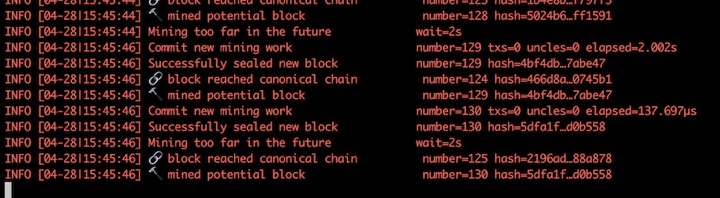

To view logs, open another terminal and tail the log file created after starting the private chain. The log file should be similar to below.

You can create new account in the java script console or import UTC file into keystone directory. I'm going to use one of account as default account created in genesis Gile.

Find default account, type the following command in javascript console.

>eth.coinbase

This will display first account in alloc. Incase if you want to change second one type following in javascript console.

>miner.setEtherbase(web3.eth.accounts[1])

To check the balance

>eth.getBalance(eth.coinbase)

To start the mining

>miner.start()

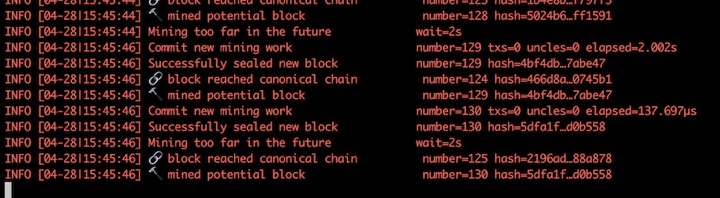

In the other terminal you can see the mining action in the log file as below.

If you want to stop mining

To get node info

>admin.nodeInfo.enode

You may see message similar to below with different address.

"enode://c48aa53952fb55f29cf7f8d880070b8e625125b89d88a907a08207a525df2b7ddeb6de5cd169d4a0705bc037104cb487cc5d74b95105f501d80defb2e904566d@[::]:30303"

This information you may need to add more peers

Install geth

$brew update

$brew upgrade

$brew tap ethereum/ethereum

$brew install ethereum

For this setup I use ~/code/ethereum as my root directory for development setup. Create devethchain directory.

$mkdir devethchain

Create accounts

$geth --datadir ~/code/ethereum/devethchain account new

Create multiple accounts. After creating if you list files in keystore, you should see following files

$ls -l keystore

Get the account ID

Create Genesis file

In genesis file following values can be filled in

config

- chainId — chain’s identifier, is used in replay protection.

- homesteadBlock, eip155Block, eip158Block, byzantiumBlock — these relate to chain forking and versioning, for new blockchain leave them as 0 value.

difficulty

This is to mine a block. Setting this value low (~10–10000) is helpful in a private blockchain as it lets you mine blocks quickly, which equals fast transactions, and plenty of ETH to test with. For comparison, the Ethereum mainnet Genesis file defines a difficulty of 17179869184.

gasLimit

This is the the total amount of gas that can be used in each block. With such a low mining difficulty, blocks will be moving pretty quick, but you should still set this value pretty high to avoid hitting the limit and slowing down your network.

alloc

Here you can allocate ETH to specific addresses you have created before. You will need to add the account to your private chain in order to use it, and to do that you need access to the keystore/utc file. The addresses provided are not real addresses, they are just examples. Here we allocate 100,000 and 120,000 ETH respectively.

$cd ~/code/ethereum/devethchain

$touch devgenesis.json

Add following into devgenesis.json file

{

"config": {

"chainId": 1966,

"homesteadBlock": 0,

"eip155Block": 0,

"eip158Block": 0,

"byzantiumBlock": 0

},

"difficulty": "400",

"gasLimit": "2000000",

"alloc": {

"b0f4aa6ba57c075f596af7e56d4c63ca986fc0e5": {

"balance": "100000000000000000000000"

},

"b2be78f4cac00988250aaaa1c7001e199b426c3b": {

"balance": "120000000000000000000000"

}

}

}

Initialize your node

geth --datadir ~/code/ethereum/devethchain init ~/code/ethereum/devethchain/devgenesis.json

Start private chain

To keep private network you have to provide Networkid value. You can use any number here, I have used 196609, but other peers joining your network must use the same one.

geth --datadir ~/code/ethereum/devethchain --networkid 196609 console 2>> devethchain.log

Output should be like above. This is the geth JavaScript console. Any command with the symbol > should be typed here.

To view logs, open another terminal and tail the log file created after starting the private chain. The log file should be similar to below.

You can create new account in the java script console or import UTC file into keystone directory. I'm going to use one of account as default account created in genesis Gile.

Find default account, type the following command in javascript console.

>eth.coinbase

This will display first account in alloc. Incase if you want to change second one type following in javascript console.

>miner.setEtherbase(web3.eth.accounts[1])

To check the balance

>eth.getBalance(eth.coinbase)

To start the mining

>miner.start()

In the other terminal you can see the mining action in the log file as below.

If you want to stop mining

To get node info

>admin.nodeInfo.enode

You may see message similar to below with different address.

"enode://c48aa53952fb55f29cf7f8d880070b8e625125b89d88a907a08207a525df2b7ddeb6de5cd169d4a0705bc037104cb487cc5d74b95105f501d80defb2e904566d@[::]:30303"

This information you may need to add more peers

Dependency management in Golang

15/04/18 18:14 Filed in: Golang

Glide was one of the top tool for dependency management for Go. The Glide may become obsolete because of Dev packages introduced in Golang.

dep is a prototype dependency management tool for Go. It requires Go 1.9 or newer to compile. dep is safe for production use. Initially there was planning to integrate deployment into go 1.10.x version but still it has long way. So now you have to install and use separately.

Following are the examples for dep command.

dep init set up a new project

dep ensure install the project's dependencies

dep ensure -update update the locked versions of all dependencies

dep ensure -add github.com/pkg/errors add a dependency to the project

- Message from Glide team :

dep is a prototype dependency management tool for Go. It requires Go 1.9 or newer to compile. dep is safe for production use. Initially there was planning to integrate deployment into go 1.10.x version but still it has long way. So now you have to install and use separately.

Install dep package

- $ brew install dep

- $ brew upgrade dep

Create the project inside $GOPATH

- Create new project under $GOPATH/src/elasticcloudapps.com/hellodep

- package main

- import (

- )

- func main() {

- }

- Initialize dep

- Dep needs two files called Gopkg.lock and Gopkg.toml. You can initialize these files with following

- $ dep init

- Locking in master (d644981) for transitive dep golang.org/x/crypto

- Locking in master (2281fa9) for transitive dep golang.org/x/sys

- Using ^1.0.5 as constraint for direct dep github.com/sirupsen/logrus

- Locking in v1.0.5 (c155da1) for direct dep github.com/sirupsen/logrus

- After this the new files will be generated.

Following are the examples for dep command.

dep init set up a new project

dep ensure install the project's dependencies

dep ensure -update update the locked versions of all dependencies

dep ensure -add github.com/pkg/errors add a dependency to the project

Easy way to create ICO on Stellar network

07/04/18 21:54 Filed in: Blockchain

An Initial Coin Offering (ICO) is used by startups to bypass the rigorous and regulated capital-raising process required by venture capitalists or banks. In an ICO campaign, a percentage of the cryptocurrency is sold to early backers of the project in exchange for legal tender or other cryptocurrencies. The majority of the ICOs today are made on Ethereum (ERC20 token). But Ethereum is slow running transaction with high cost. If required to raise funds for the startup then Stellar can do a lot faster, better and cheaper, it is an open source platform for developing financial applications.

The advantages are:

• Less transaction fees

• Perform almost 100 time faster

• Less development effort to get ICO online.

• Tokens are available on Stellar Distributed Exchange in a day, where anyone can trade without waiting for it to get listed on other exchanges.

Stellar is an infrastructure for payments; connects banks, payments systems and people around the world, designed from the start to make it really easy for financial institutions to issue tokens representing fiat currencies. Also has built in distributed exchange which allows people to not only buy and sell currencies like in a typical foreign exchange way but also to seamlessly convert from one currency to another during cross border or cross currency transactions.

In this blog I have described how to create custom token on Stellar Network and accepting BTC/ETH contributions in an ICO. We will be using stellar laboratory and test network for this article. The functionalities provided here can be done through Stellar SDK as well. We need two accounts on Stellar to proceed, one is “Issuing account” and another is “Distributing account”. Although you could create tokens using one account but its always good to keep issuing account separate and use assets from distributing account.

Following tasks required:

1. Create issuer and distribution account. 2. Add trust

3. Issue Token

4. Freeze total supply

5. Selling token and accepting XLM

Accounts are the central data structure in Stellar. Accounts are identified by a public key and saved in the ledger and everything in the ledger is owned by a particular account. Account access is controlled by public/private key cryptography. For an account to perform a transaction–e.g., make a payment–the transaction must be signed by the private key that corresponds to that account’s public key.

Accounts have the following fields:

Account ID

The public key that was first used to create the account. You can replace the key used for signing the account’s transactions with a different public key, but the original account ID will always be used to identify the account.

Balance

The number of lumens held by the account. The balance is denominated in 1/10,000,000th of a lumen, the smallest divisible unit of a lumen.

Sequence number

The current transaction sequence number of the account. This number starts equal to the ledger number at which the account was created.

Number of subentries

Number of other entries the account owns. This number is used to calculate the account’s minimum balance.

Inflation destination

(optional) Account designated to receive inflation. Every account can vote to send inflation to a destination account.

Flags

Currently there are three flags, used by issuers of assets.

- Authorization required (0x1): Requires the issuing account to give other accounts permission before they can hold the issuing account’s credit.

- Authorization revocable (0x2): Allows the issuing account to revoke its credit held by other accounts.

- Authorization immutable (0x4): If this is set then none of the authorization flags can be set and the account can never be deleted.

Home domain

A domain name that can optionally be added to the account. Clients can look up a stellar.toml from this domain. This should be in the format of a fully qualified domain name such as example.com. The federation protocol can use the home domain to look up more details about a transaction’s memo or address details about an account. For more on federation, see the federation guide.

Thresholds

Operations have varying levels of access. This field specifies thresholds for low-, medium-, and high-access levels, as well as the weight of the master key. For more info, see multi-sig.

Signers

Used for multi-sig. This field lists other public keys and their weights, which can be used to authorize transactions for this account.

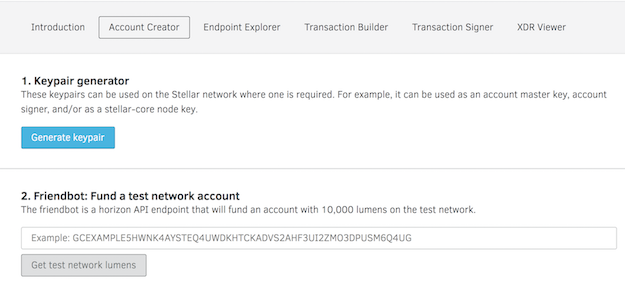

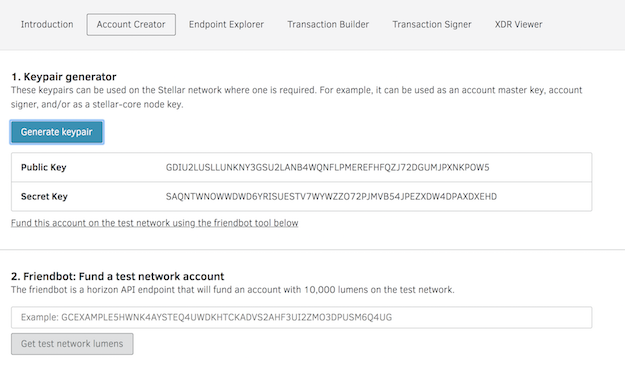

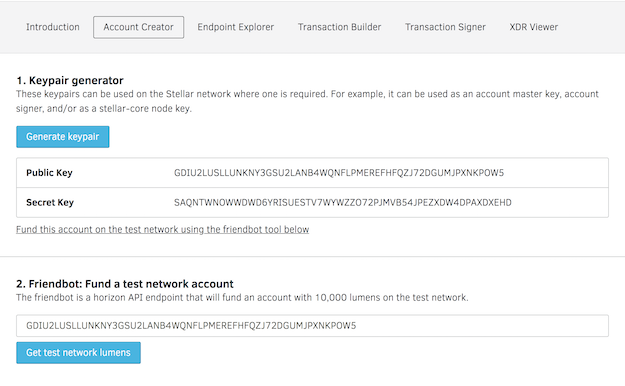

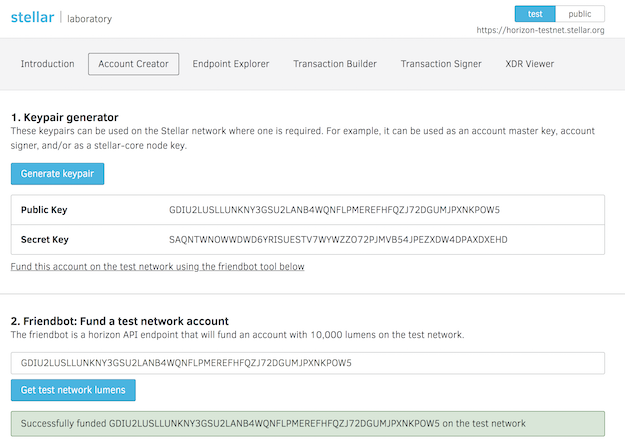

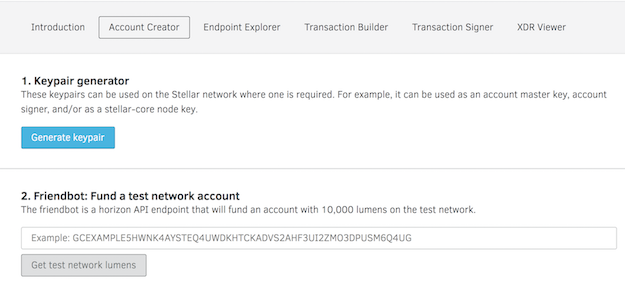

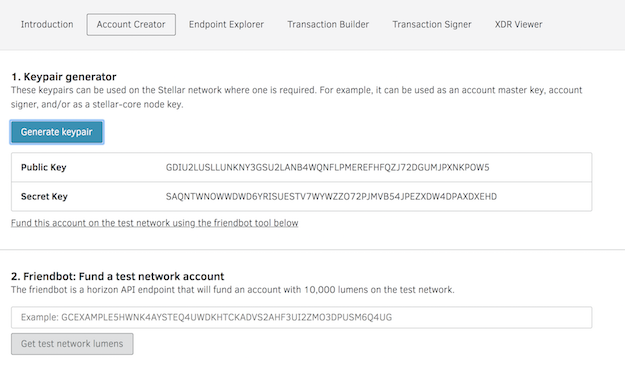

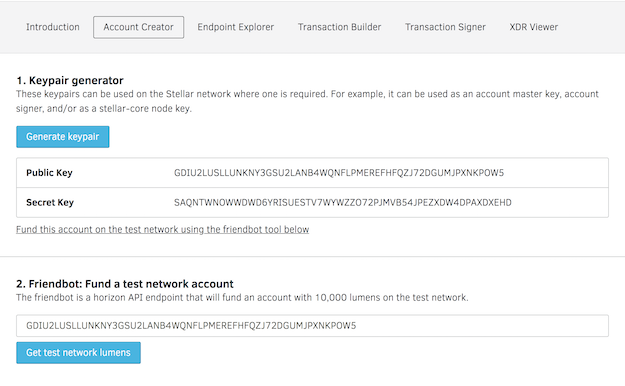

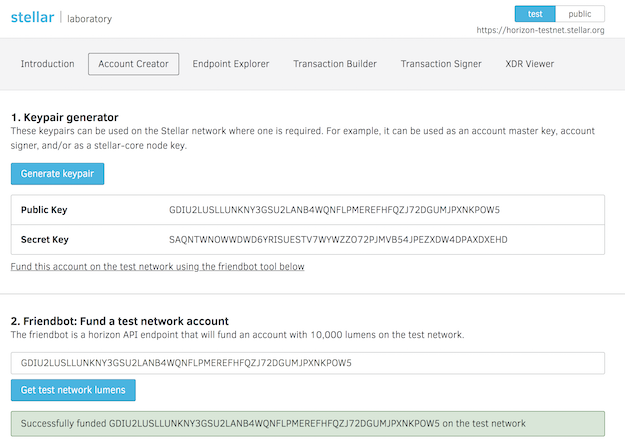

The process for creating issuing account and distributing account are same, its just a general concept to keep things separate. Save details of this account as well, we will be using this account to sell tokens. Follow the below instructions to create issuer and distribution accounts.

Steps

1. Goto https://www.stellar.org/laboratory/

2. Click on account creator tab.

3. Generate key pair - Save the public address and secret.

4. Fund the test Account - Use Friendbot available on the same tab to fund the test account.

Images are attached explaining screen flow.

The issuer test account details can be viewed by using GET URL

https://horizon-testnet.stellar.org/accounts/GBCVYWPJWSHBZCON75LQY5ZW7JWZRA63YU4EEILGFHZENMPZRT25PWQW

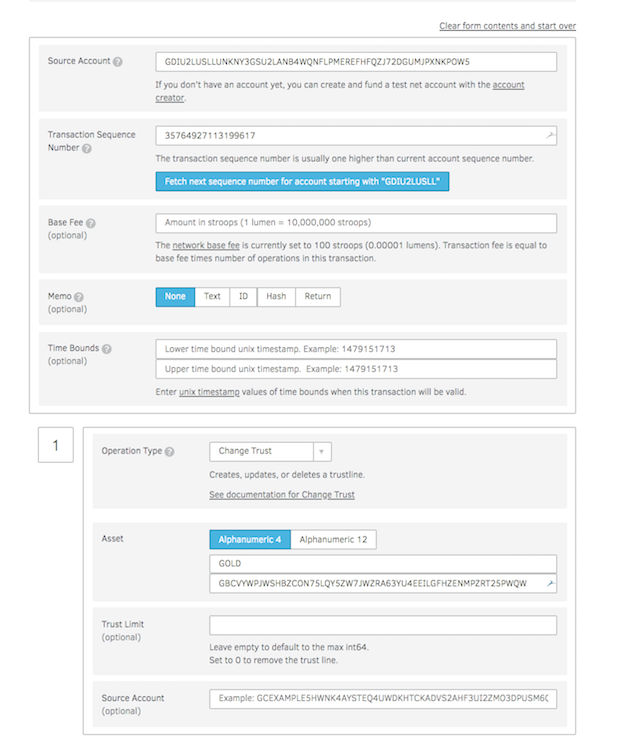

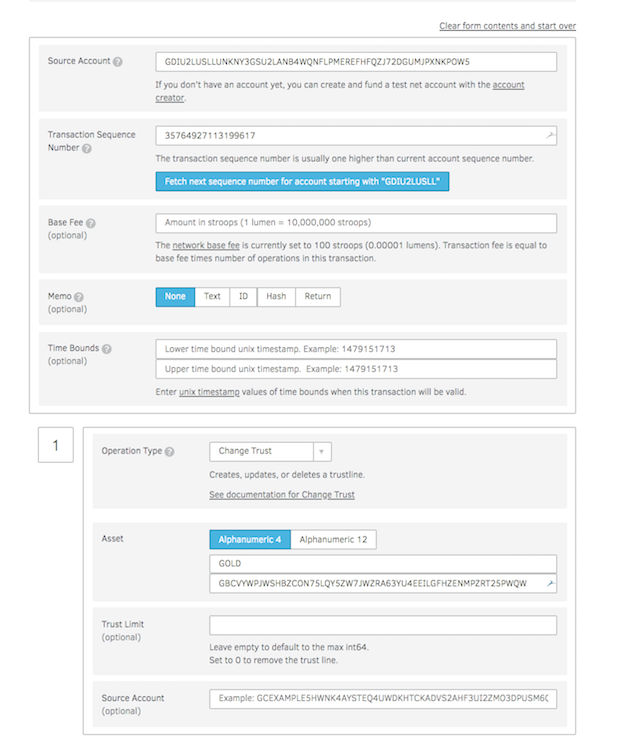

The distribution needs to trust the asset to receive tokens from issuing account. When someone buys token from the exchange they are already trusting the asset, but someone who has not purchased the asset before has to add the trustline in order to receive your tokens. Adding trust is easy as creating a transaction on stellar network.

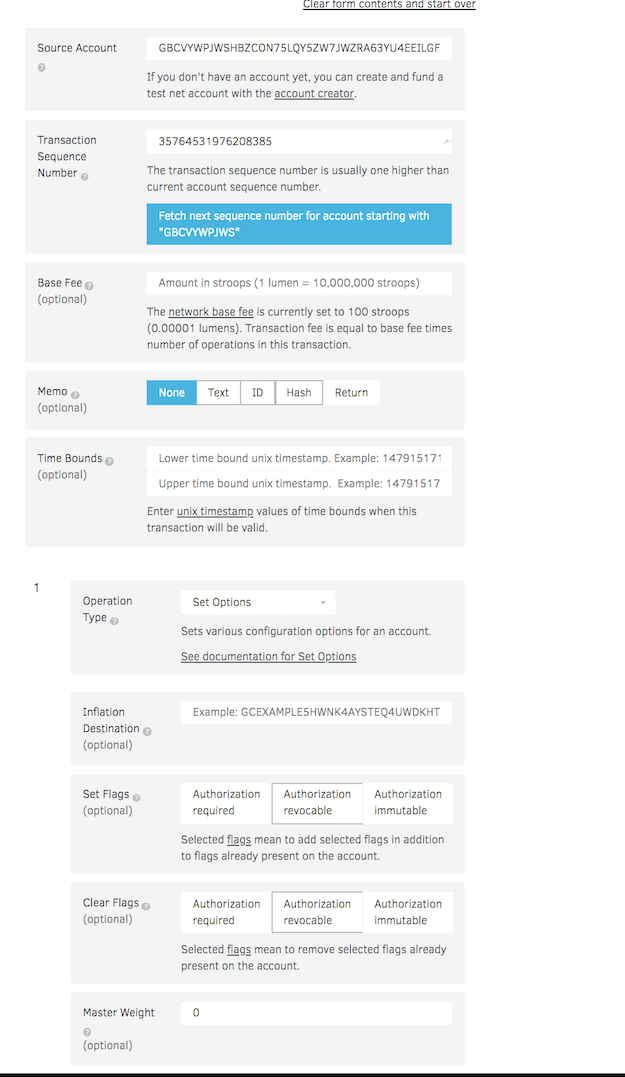

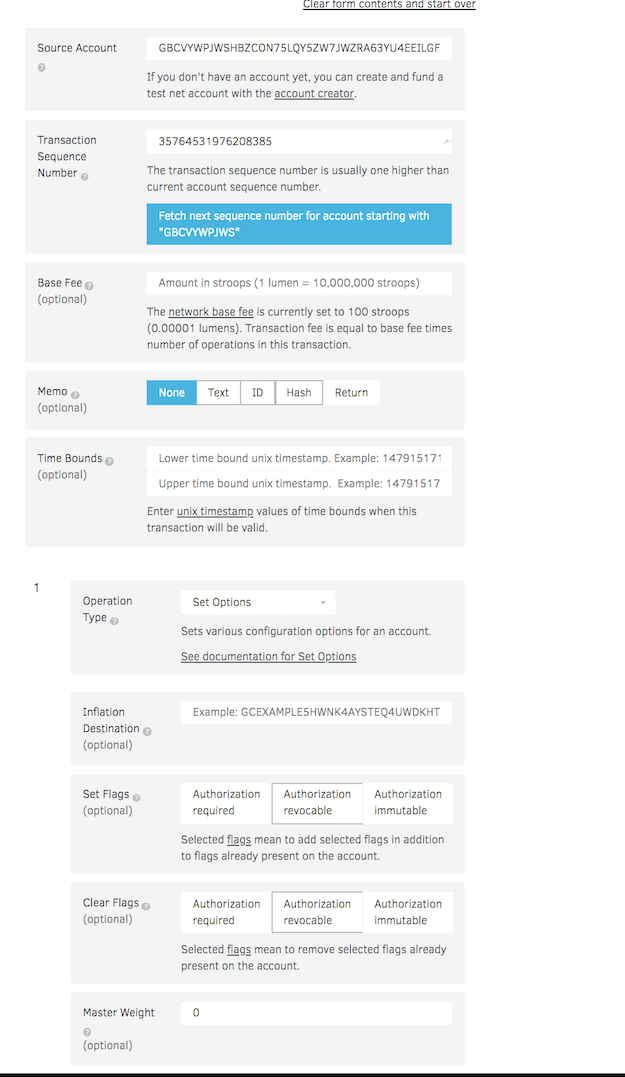

Go to the Transaction builder tab in the laboratory and fill in the following

1. Source account with distributing account number.

2. Click on “Fetch next sequence number ….”

3. Change operation type to “Change Trust”

4. Provide Asset code e.g: GOLD

5. Click on Sign in Transaction signer

If the transaction is successful you have successfully added trust for the new asset, which issuing account is about to send.

Assets

The Stellar distributed network can be used to track, hold, and transfer any type of asset: dollars, euros, bitcoin, stocks, gold, and other tokens of value. Any asset on the network can be traded and exchanged with any other. Other than lumens (see below), all assets have

- Asset type: e.g., USD or BTC

- Issuer: the account that created the asset

Trustlines

When you hold assets in Stellar, you’re actually holding credit from a particular issuer. The issuer has agreed that it will trade you its credit on the Stellar network for the corresponding asset–e.g., fiat currency, precious metal–outside of Stellar. When you hold an asset, you must trust the issuer to properly redeem its credit. Since users of Stellar will not want to trust just any issuer, accounts must explicitly trust an issuing account before they’re able to hold the issuer’s credit.

Lumens (XLM)

Lumens (XLM) are the native currency of the network. A lumen is the only asset type that can be used on the Stellar network that doesn’t require an issuer or a trustline. Any account can hold lumens. You can trade lumens for other assets in the network.

Anchors: issuing assets

Any account can issue assets on the Stellar network. Entities that issue assets are called anchors. Anchors can be run by individuals, small businesses, local communities, nonprofits, organizations, etc. Any type of financial institution–a bank, a payment processor–can be an anchor. Each anchor has an issuing account from which it issues the asset. As an anchor, when you issue an asset, you give it an asset code. Assets are uniquely identified by the asset code and the issuer. Ultimately, it’s up to the issuer to set the asset code. By convention, however, currencies should be represented by the appropriate ISO 4217 code. For stocks and bonds, use the appropriate ISIN number. For your orange, goat, favor, or beer anchors, you’re on your own–invent an appropriate code!

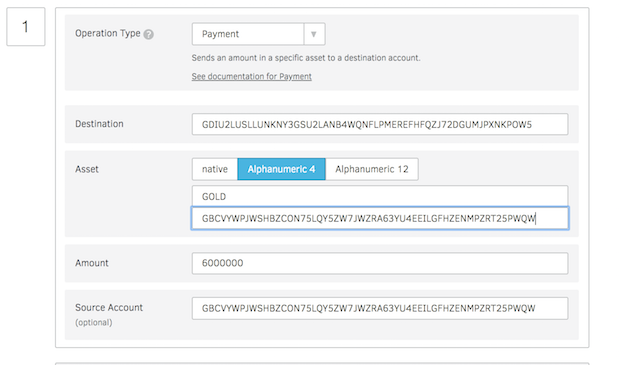

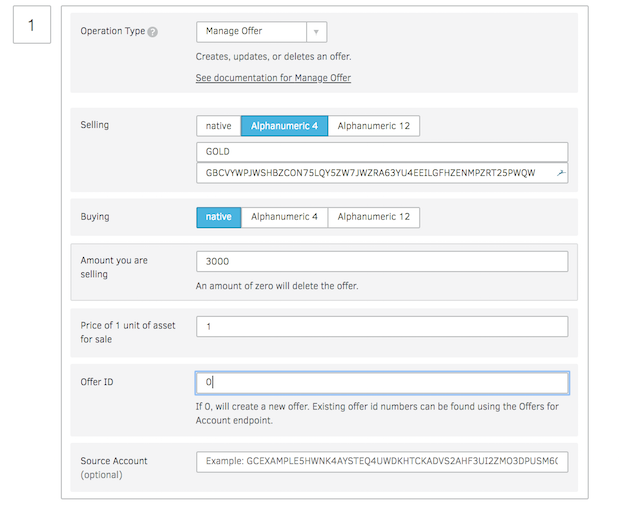

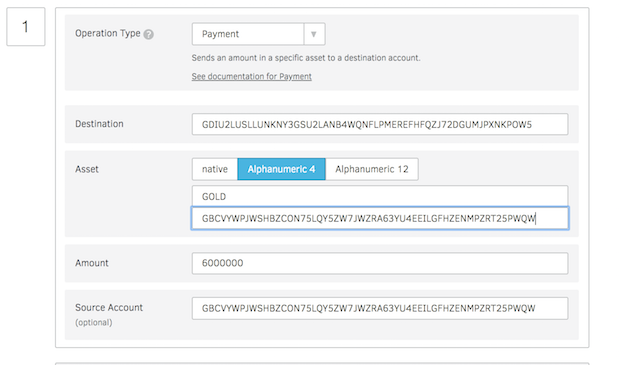

This section describe the payment process to the distributing account from issuing account of total tokens you want to generate. Go to transaction builder and refer below screen to fill up the form. Enter source address as issuing account address, fetch the next transaction number and than set option. After filling the information click on Sign in Transaction signers. If the transaction is successful you have successfully created a token on Stellar platform and sent it to distributing account from where it can be sold.

After completing the payment the issuing account should be freezes so no more tokens can be generated later. See below screen to set the freeze process.

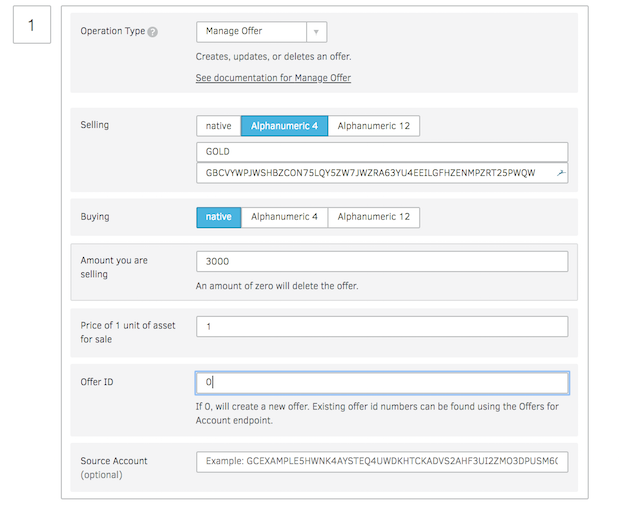

Using Stellar decentralized exchange, you can put a sell order with desired price and any one can buy the tokens. This accepts in XLM (native option) in Stellar platform. To accept in BTC/ETH need more development effort required.

The advantages are:

• Less transaction fees

• Perform almost 100 time faster

• Less development effort to get ICO online.

• Tokens are available on Stellar Distributed Exchange in a day, where anyone can trade without waiting for it to get listed on other exchanges.

Stellar is an infrastructure for payments; connects banks, payments systems and people around the world, designed from the start to make it really easy for financial institutions to issue tokens representing fiat currencies. Also has built in distributed exchange which allows people to not only buy and sell currencies like in a typical foreign exchange way but also to seamlessly convert from one currency to another during cross border or cross currency transactions.

In this blog I have described how to create custom token on Stellar Network and accepting BTC/ETH contributions in an ICO. We will be using stellar laboratory and test network for this article. The functionalities provided here can be done through Stellar SDK as well. We need two accounts on Stellar to proceed, one is “Issuing account” and another is “Distributing account”. Although you could create tokens using one account but its always good to keep issuing account separate and use assets from distributing account.

Following tasks required:

1. Create issuer and distribution account. 2. Add trust

3. Issue Token

4. Freeze total supply

5. Selling token and accepting XLM

Create issuer and distribution account

Accounts are the central data structure in Stellar. Accounts are identified by a public key and saved in the ledger and everything in the ledger is owned by a particular account. Account access is controlled by public/private key cryptography. For an account to perform a transaction–e.g., make a payment–the transaction must be signed by the private key that corresponds to that account’s public key.

Accounts have the following fields:

Account ID

The public key that was first used to create the account. You can replace the key used for signing the account’s transactions with a different public key, but the original account ID will always be used to identify the account.

Balance

The number of lumens held by the account. The balance is denominated in 1/10,000,000th of a lumen, the smallest divisible unit of a lumen.

Sequence number

The current transaction sequence number of the account. This number starts equal to the ledger number at which the account was created.

Number of subentries

Number of other entries the account owns. This number is used to calculate the account’s minimum balance.

Inflation destination

(optional) Account designated to receive inflation. Every account can vote to send inflation to a destination account.

Flags

Currently there are three flags, used by issuers of assets.

- Authorization required (0x1): Requires the issuing account to give other accounts permission before they can hold the issuing account’s credit.

- Authorization revocable (0x2): Allows the issuing account to revoke its credit held by other accounts.

- Authorization immutable (0x4): If this is set then none of the authorization flags can be set and the account can never be deleted.

Home domain

A domain name that can optionally be added to the account. Clients can look up a stellar.toml from this domain. This should be in the format of a fully qualified domain name such as example.com. The federation protocol can use the home domain to look up more details about a transaction’s memo or address details about an account. For more on federation, see the federation guide.

Thresholds

Operations have varying levels of access. This field specifies thresholds for low-, medium-, and high-access levels, as well as the weight of the master key. For more info, see multi-sig.

Signers

Used for multi-sig. This field lists other public keys and their weights, which can be used to authorize transactions for this account.

The process for creating issuing account and distributing account are same, its just a general concept to keep things separate. Save details of this account as well, we will be using this account to sell tokens. Follow the below instructions to create issuer and distribution accounts.

Steps

1. Goto https://www.stellar.org/laboratory/

2. Click on account creator tab.

3. Generate key pair - Save the public address and secret.

4. Fund the test Account - Use Friendbot available on the same tab to fund the test account.

Images are attached explaining screen flow.

The issuer test account details can be viewed by using GET URL

https://horizon-testnet.stellar.org/accounts/GBCVYWPJWSHBZCON75LQY5ZW7JWZRA63YU4EEILGFHZENMPZRT25PWQW

Add trust

The distribution needs to trust the asset to receive tokens from issuing account. When someone buys token from the exchange they are already trusting the asset, but someone who has not purchased the asset before has to add the trustline in order to receive your tokens. Adding trust is easy as creating a transaction on stellar network.

Go to the Transaction builder tab in the laboratory and fill in the following

1. Source account with distributing account number.

2. Click on “Fetch next sequence number ….”

3. Change operation type to “Change Trust”

4. Provide Asset code e.g: GOLD

5. Click on Sign in Transaction signer

If the transaction is successful you have successfully added trust for the new asset, which issuing account is about to send.

Assets

The Stellar distributed network can be used to track, hold, and transfer any type of asset: dollars, euros, bitcoin, stocks, gold, and other tokens of value. Any asset on the network can be traded and exchanged with any other. Other than lumens (see below), all assets have

- Asset type: e.g., USD or BTC

- Issuer: the account that created the asset

Trustlines

When you hold assets in Stellar, you’re actually holding credit from a particular issuer. The issuer has agreed that it will trade you its credit on the Stellar network for the corresponding asset–e.g., fiat currency, precious metal–outside of Stellar. When you hold an asset, you must trust the issuer to properly redeem its credit. Since users of Stellar will not want to trust just any issuer, accounts must explicitly trust an issuing account before they’re able to hold the issuer’s credit.

Lumens (XLM)

Lumens (XLM) are the native currency of the network. A lumen is the only asset type that can be used on the Stellar network that doesn’t require an issuer or a trustline. Any account can hold lumens. You can trade lumens for other assets in the network.

Anchors: issuing assets

Any account can issue assets on the Stellar network. Entities that issue assets are called anchors. Anchors can be run by individuals, small businesses, local communities, nonprofits, organizations, etc. Any type of financial institution–a bank, a payment processor–can be an anchor. Each anchor has an issuing account from which it issues the asset. As an anchor, when you issue an asset, you give it an asset code. Assets are uniquely identified by the asset code and the issuer. Ultimately, it’s up to the issuer to set the asset code. By convention, however, currencies should be represented by the appropriate ISO 4217 code. For stocks and bonds, use the appropriate ISIN number. For your orange, goat, favor, or beer anchors, you’re on your own–invent an appropriate code!

Issue Token

This section describe the payment process to the distributing account from issuing account of total tokens you want to generate. Go to transaction builder and refer below screen to fill up the form. Enter source address as issuing account address, fetch the next transaction number and than set option. After filling the information click on Sign in Transaction signers. If the transaction is successful you have successfully created a token on Stellar platform and sent it to distributing account from where it can be sold.

Freeze total supply

After completing the payment the issuing account should be freezes so no more tokens can be generated later. See below screen to set the freeze process.

Selling token and accepting XLM

Using Stellar decentralized exchange, you can put a sell order with desired price and any one can buy the tokens. This accepts in XLM (native option) in Stellar platform. To accept in BTC/ETH need more development effort required.

References

- Concepts definitions are from Stellar site : https://www.stellar.org/developers/guides/concepts/accounts.html

- Create ICO blog from Ashish Prajapati : https://medium.com/@ashisherc/create-an-ico-on-stellar-network-with-custom-token-7b6aab349f33

Blockchain Architecture - Part 2: Application Templates

01/02/18 01:30 Filed in: Blockchain

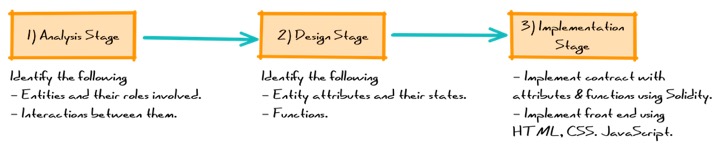

On building a blockchain application, following stages can be applied.

Analysis Stage: Identify the entities involved, their roles and types of interactions between them.

Design Stage: Model the entity attributes as state variable and interactions between them as functions. Also capture dependencies and constraints.

Implementation Stage: Implement the contract, front-end and back-end application.

The templates are defined below:

One-to-One: TBD

Many-to-One: TBD

Many-to-Many:TBD

Analysis Stage: Identify the entities involved, their roles and types of interactions between them.

Design Stage: Model the entity attributes as state variable and interactions between them as functions. Also capture dependencies and constraints.

Implementation Stage: Implement the contract, front-end and back-end application.

The templates are defined below:

One-to-One: TBD

Many-to-One: TBD

Many-to-Many:TBD

Blockchain Architecture - Part 1: Introduction

04/01/18 22:23 Filed in: Blockchain

Part 2: Application Templates

Blockchain is a distributed and public ledger which maintains records of all transactions. A blockchain network is a truly peer-to-peer network and it does not require a trusted central authority intermediaries to authenticate or control the network infrastructure. With the blockchain’s ability to establish trust in a peer-to-peer network through a distributed consensus mechanism rather than relying on central authority. Blockchain has the potential to distrust not just the financial industry but also other industries including manufacturing, supply chain, logistics and healthcare.

There are two main parts in the Blockchain: the blockchain network, and the blockchain code. The blockchain network is the infrastructure where group of organizations to share data and processes together on a blockchain. The blockchain code runs on the blockchain network, and defines the organizations who can participate in the network. There are different categories of Blockchain including permissive (private), and public Blockchain models. A public blockchain network is completely open and anyone can join and participate in the network. The network typically has an incentivizing mechanism to encourage more participants to join the network. Bitcoin is one of the largest public blockchain networks in production today. A private blockchain network requires an invitation and must be validated by either the network starter or by a set of rules put in place by the network starter. Businesses who set up a private blockchain, will generally set up a permission network. This places restrictions on who is allowed to participate in the network, and only in certain transactions. Participants need to obtain an invitation or permission to join.

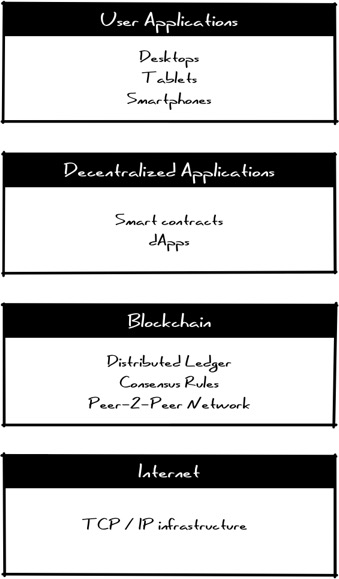

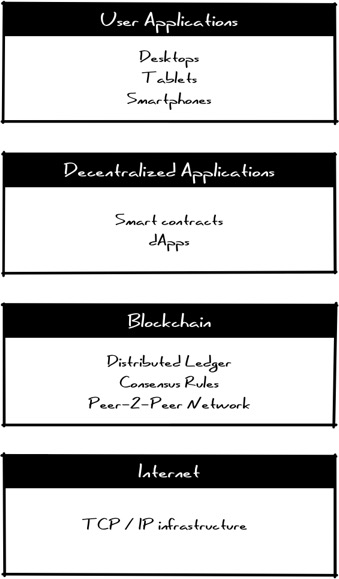

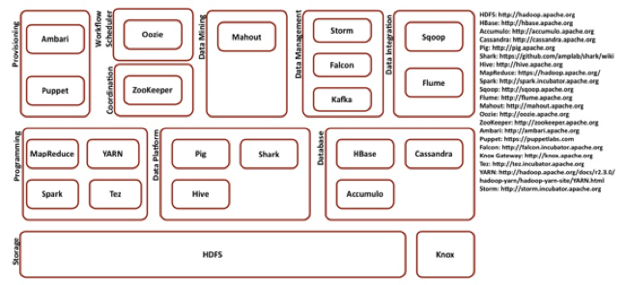

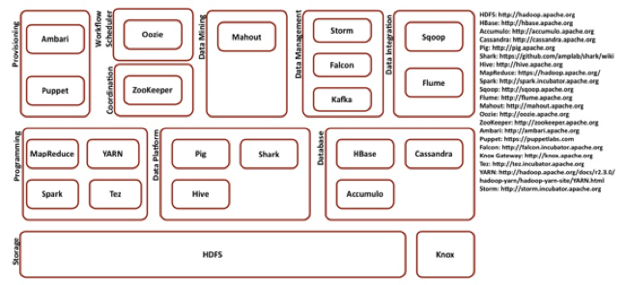

Blockchain operates on technology stack described below.

The blockchain protocol operates on top of internet stack through a peer-to-peer network of nodes. The stack is responsible for executing protocol, completing transactions based on a cryptographic consensus algorithm. The recorded transactions cannot be changed and can be inspected by anyone.

Decentralized applications (dApps) are applications that run on a P2P network of computers rather than a single computer. dApps are similar to a conventional web application. The front end uses the exact same technology to render the page. The one critical difference is that instead of an API connecting to a Database, you have a Smart Contract connecting to a blockchain. dApp enabled website: Front End → Smart Contract → Blockchain. As opposed to traditional, centralized applications, where the backend code is running on centralized servers, dApps have their backend code running on a decentralized P2P network. Customer interaction is done through user application layer. This is the consumer-facing part of the stack. These applications will work similar to current web or mobile applications but the main difference is they are built on decentralized protocols will be able to talk to each other.

Blockchain is a distributed and public ledger which maintains records of all transactions. A blockchain network is a truly peer-to-peer network and it does not require a trusted central authority intermediaries to authenticate or control the network infrastructure. With the blockchain’s ability to establish trust in a peer-to-peer network through a distributed consensus mechanism rather than relying on central authority. Blockchain has the potential to distrust not just the financial industry but also other industries including manufacturing, supply chain, logistics and healthcare.

There are two main parts in the Blockchain: the blockchain network, and the blockchain code. The blockchain network is the infrastructure where group of organizations to share data and processes together on a blockchain. The blockchain code runs on the blockchain network, and defines the organizations who can participate in the network. There are different categories of Blockchain including permissive (private), and public Blockchain models. A public blockchain network is completely open and anyone can join and participate in the network. The network typically has an incentivizing mechanism to encourage more participants to join the network. Bitcoin is one of the largest public blockchain networks in production today. A private blockchain network requires an invitation and must be validated by either the network starter or by a set of rules put in place by the network starter. Businesses who set up a private blockchain, will generally set up a permission network. This places restrictions on who is allowed to participate in the network, and only in certain transactions. Participants need to obtain an invitation or permission to join.

Blockchain operates on technology stack described below.

The blockchain protocol operates on top of internet stack through a peer-to-peer network of nodes. The stack is responsible for executing protocol, completing transactions based on a cryptographic consensus algorithm. The recorded transactions cannot be changed and can be inspected by anyone.

Decentralized applications (dApps) are applications that run on a P2P network of computers rather than a single computer. dApps are similar to a conventional web application. The front end uses the exact same technology to render the page. The one critical difference is that instead of an API connecting to a Database, you have a Smart Contract connecting to a blockchain. dApp enabled website: Front End → Smart Contract → Blockchain. As opposed to traditional, centralized applications, where the backend code is running on centralized servers, dApps have their backend code running on a decentralized P2P network. Customer interaction is done through user application layer. This is the consumer-facing part of the stack. These applications will work similar to current web or mobile applications but the main difference is they are built on decentralized protocols will be able to talk to each other.

Add sudo user in Ubuntu

15/06/17 18:48 Filed in: Devops

The sudo users are granted with administrator privileges to perform administrative tasks. This guide will show you the easiest way to create a new user with sudo access on Ubuntu, without having to modify your server's sudoers file. If you want to configure sudo for an existing user, simply skip to step 3.

Steps to Create a New Sudo User. Be sure to replace server and user name with your name.

Log in to your server as the root user.

$ssh root@SERVER

Use the adduser command to add a new user to your system.

$adduser username

Set and confirm the new user's password at the prompt. A strong password is highly recommended. Set password prompts:

$Enter new UNIX password:

$Retype new UNIX password:

$passwd: password updated successfully

Follow the prompts to set the new user's information. It is fine to accept the defaults to leave all of this information blank.

$Enter the new value, or press ENTER for the default

Full Name []:

Room Number []:

Work Phone []:

Home Phone []:

Other []:

Is the information correct? [Y/n]

Use the usermod command to add the user to the sudo group.

$usermod -aG sudo username

Test sudo access on new user account. Use the su command to switch to the new user account.

$su - username

As the new user, verify that you can use sudo by prepending "sudo" to the command that you want to run with superuser privileges.

$sudo command_to_run

For example, you can list the contents of the /root directory, which is normally only accessible to the root user.

$sudo ls -la /root

The first time you use sudo in a session, you will be prompted for the password of the user account. Enter the password to proceed.

Output:

[sudo] password for username:

If your user is in the proper group and you entered the password correctly, the command that you issued with sudo should run with root privileges.

Steps to Create a New Sudo User. Be sure to replace server and user name with your name.

Log in to your server as the root user.

$ssh root@SERVER

Use the adduser command to add a new user to your system.

$adduser username

Set and confirm the new user's password at the prompt. A strong password is highly recommended. Set password prompts:

$Enter new UNIX password:

$Retype new UNIX password:

$passwd: password updated successfully

Follow the prompts to set the new user's information. It is fine to accept the defaults to leave all of this information blank.

$Enter the new value, or press ENTER for the default

Full Name []:

Room Number []:

Work Phone []:

Home Phone []:

Other []:

Is the information correct? [Y/n]

Use the usermod command to add the user to the sudo group.

$usermod -aG sudo username

Test sudo access on new user account. Use the su command to switch to the new user account.

$su - username

As the new user, verify that you can use sudo by prepending "sudo" to the command that you want to run with superuser privileges.

$sudo command_to_run

For example, you can list the contents of the /root directory, which is normally only accessible to the root user.

$sudo ls -la /root

The first time you use sudo in a session, you will be prompted for the password of the user account. Enter the password to proceed.

Output:

[sudo] password for username:

If your user is in the proper group and you entered the password correctly, the command that you issued with sudo should run with root privileges.

Setting up Go development environment

02/03/17 21:39 Filed in: Programming | Golang

Go is fast growing programming language, so I thought it would be helpful to show how to setup and run Go programs on your machine. The instruction is for Mac OS X. Homebrew is required. If you don't have home-brew in your local environment, follow this link to setup home brew in your Mac development environment.

https://brew.sh/

$ brew install go

In order to run Go within your terminal, you’ll need to setup the GOPATH environment variable. This will allow Go to properly locate your workspace in order to run your code and manage your packages. I have my workspace "code" inside my home directory. ($HOME/code") and or Golang I have in $HOME/code/go. I'm using bash profile to setup GOPATH.

1. Edit ~/.bash_profile.

2. Add following lines to bash_profile

export GOPATH="$HOME/code/go"

export GOROOT=/usr/local/opt/go/libexec

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

3. I.e. source .bash_profile file or close and open terminal.

$ source ~/.bash_profile

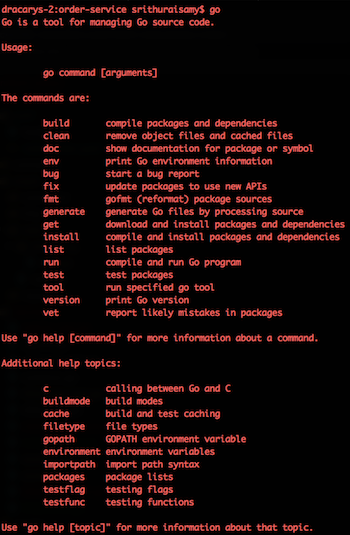

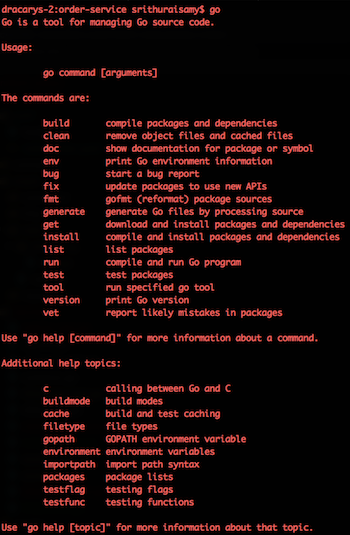

After the setup when you type go you should see as below.

https://brew.sh/

$ brew install go

In order to run Go within your terminal, you’ll need to setup the GOPATH environment variable. This will allow Go to properly locate your workspace in order to run your code and manage your packages. I have my workspace "code" inside my home directory. ($HOME/code") and or Golang I have in $HOME/code/go. I'm using bash profile to setup GOPATH.

1. Edit ~/.bash_profile.

2. Add following lines to bash_profile

export GOPATH="$HOME/code/go"

export GOROOT=/usr/local/opt/go/libexec

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

3. I.e. source .bash_profile file or close and open terminal.

$ source ~/.bash_profile

After the setup when you type go you should see as below.

Setup Docker in Ubuntu 14.04

10/09/14 16:51 Filed in: Cloud architecture | Docker

Overview

We are entering an age of open cloud infrastructure and application portability. The applications/services are required to scale in/out as per demand and need to be executed in multiple host environment. For this applications are required to run within shell, and the shell/container needs to work across several hosts and be portable to any cloud environment.

Docker is a container-based software framework for automating deployment of applications, makes it easy to partition a single host into multiple containers. However, although useful, many applications require resources beyond a single host, and real-world deployments require multiple hosts for resilience, fault tolerance and easy scaling of applications.

Installtion

These instructions describe to setup latest version of Docker for Ubuntu 14.04 for not latest release from Docker. Following are the list of commands.

To setup latest Docker release, add the Docker repository key to your local keychain and process the install commands

After install the Docker you can search any community containers. For e.g if you need to search for any debian package,

We are entering an age of open cloud infrastructure and application portability. The applications/services are required to scale in/out as per demand and need to be executed in multiple host environment. For this applications are required to run within shell, and the shell/container needs to work across several hosts and be portable to any cloud environment.

Docker is a container-based software framework for automating deployment of applications, makes it easy to partition a single host into multiple containers. However, although useful, many applications require resources beyond a single host, and real-world deployments require multiple hosts for resilience, fault tolerance and easy scaling of applications.

Installtion

These instructions describe to setup latest version of Docker for Ubuntu 14.04 for not latest release from Docker. Following are the list of commands.

- sudo apt-get update

- sudo apt-get install docker.io

- sudo ln -sf /usr/bin/docker.io /usr/local/bin/docker

- sudo sed -i '$acomplete -F _docker docker' /etc/bash_completion.d/docker.io

To setup latest Docker release, add the Docker repository key to your local keychain and process the install commands

- $ sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 36A1D7869245C8950F966E92D8576A8BA88D21E9

- $ sudo sh -c "echo deb https://get.docker.io/ubuntu docker main > /etc/apt/sources.list.d/docker.list"

- $ sudo apt-get update

- $ sudo apt-get install lxc-docker

After install the Docker you can search any community containers. For e.g if you need to search for any debian package,

- docker search debian

Setup ActiveMQ, Zookeeper, and Replicated LevelDB running in JDK 8 and CentOS

22/08/14 11:53 Filed in: Data pipeline

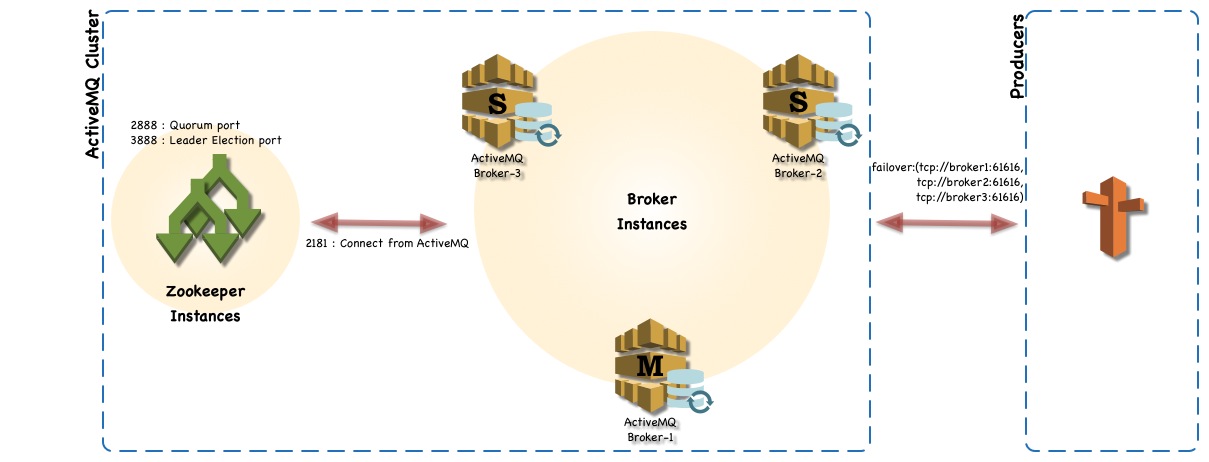

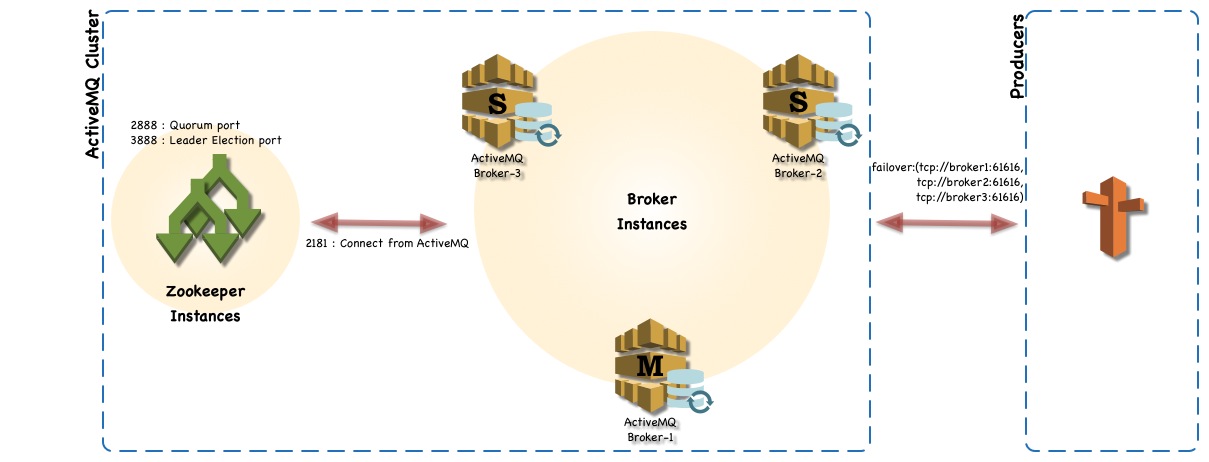

This guide describes the step-by-step guide to setup ActiveMQ to use replicated LevelDB persistence with Zookeeper. CentOS environment is used for servers. Zookeeper is used to replicate the LevelDB to support Master/Slave activeMQs. Three VMware instances are used with 2 Core processes, 2G RAM and 20G disk space. For simplicity, stop the IPtable service (firewall) in CentOS. If IPTable is required then you need to open set of ports. List of port numbers are included in pre-setup work section.

Overview

ActiveMQ cluster environment includes following

1 Three VM instances with CentOS os AND JDK 8

2 Three ActiveMQ instances.

3 Three Zookeeper instances.

Pre-install

Following ports are required to open in Iptables host firewall.

Installation

Java JDK

Zookeeper

Download activemq distribution from http://apache.mirror.nexicom.net/activemq/5.10.0/apache-activemq-5.10.0-bin.tar.gz

My approach was to get the software setup on a single VM instance in VM Ware fusion, and create two more clones to have three servers. I have named the instances as messageq1, messageq2, and messageq3. After starting instances confirm the myid file and IP address in the zoo.cfg are setup properly with new instance’s ip address.

After configured everything

No IOExceptionHandler registered, ignoring IO exception | org.apache.activemq.broker.BrokerService | LevelDB IOExcepti

on handler.

java.io.IOException: com.google.common.base.Objects.firstNonNull(Ljava/lang/Object;Ljava/lang/Object Ljava/lang/Object;

Ljava/lang/Object;

at org.apache.activemq.util.IOExceptionSupport.create(IOExceptionSupport.java:39)[activemq-client-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBClient.might_fail(LevelDBClient.scala:552)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBClient.replay_init(LevelDBClient.scala:657)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBClient.start(LevelDBClient.scala:558)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.DBManager.start(DBManager.scala:648)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBStore.doStart(LevelDBStore.scala:235)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.replicated.MasterLevelDBStore.doStart(MasterLevelDBStore.scala:110)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.util.ServiceSupport.start(ServiceSupport.java:55)[activemq-client-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.replicated.ElectingLevelDBStore$$anonfun$start_master$1.apply$mcV$sp(ElectingLevelDBStore.scala:226)[activemq-lev

eldb-store-5.10.0.jar:5.10.0]

at org.fusesource.hawtdispatch.package$$anon$4.run(hawtdispatch.scala:330)[hawtdispatch-scala-2.11-1.21.jar:1.21]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)[:1.8.0_20]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)[:1.8.0_20]

After going through tickets in activeMQ found following ticket has been reported https://issues.apache.org/jira/browse/AMQ-5225. Workaround described in the ticket will solve the issue. The work around for this issue,

To confirm ActiveMQ listening for request

◦ In master, check with netstat -an | grep 61616 and confirm the port is in listen mode.

◦ In slaves, you can run netstat -an | grep 6161 and output should show you slave binding port 61619

Post-Install

◦ For Zookeeper, set the Java heap size. This is very important to avoid swapping, which will seriously degrade ZooKeeper performance. To determine the correct value, use load tests, and make sure you are well below the usage limit that would cause you to swap. Be conservative - use a maximum heap size of 3GB for a 4GB machine.

◦ Increase the open file number to support 51200. E.g: limit -n 51200.

◦ Review linux network setting parameters : http://www.nateware.com/linux-network-tuning-for-2013.html#.VA8pN2TCMxo

◦ Review ActiveMQ transports configuration settings : http://activemq.apache.org/configuring-transports.html

◦ Review ActiveMQ persistence configuration settings : http://activemq.apache.org/persistence.html

◦ Review zookeeper configuration settings : http://zookeeper.apache.org/doc/trunk/zookeeperAdmin.html#sc_configuration

Reference

◦ tickTime: the length of a single tick, which is the basic time unit used by ZooKeeper, as measured in milliseconds. It is used to regulate heartbeats, and timeouts. For example, the minimum session timeout will be two ticks.

◦ initLimit: Amount of time, in ticks , to allow followers to connect and sync to a leader. Increased this value as needed, if the amount of data managed by ZooKeeper is large.

◦ syncLimit: Amount of time, in ticks , to allow followers to sync with ZooKeeper. If followers fall too far behind a leader, they will be dropped.

◦ clientPort: The port to listen for client connections; that is, the port that clients attempt to connect to.

◦ dataDir: The location where ZooKeeper will store the in-memory database snapshots and, unless specified otherwise, the transaction log of updates to the database.

ActiveMQ configuration file from msgq1

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://activemq.apache.org/schema/core http://activemq.apache.org/schema/core/activemq-core.xsd">

file:${activemq.conf}/credentials.properties

directory=“~/Dev/server/activemq/data/leveldb"

hostname="192.168.163.160"/>

ZK Configuration file

tickTime=2000

initLimit=5

syncLimit=2

dataDir=/home/sthuraisamy/Dev/server/data/zk

clientPort=2181

server.1=192.168.163.160:2888:3888

server.2=192.168.163.161:2888:3888

server.3=192.168.163.162:2888:3888

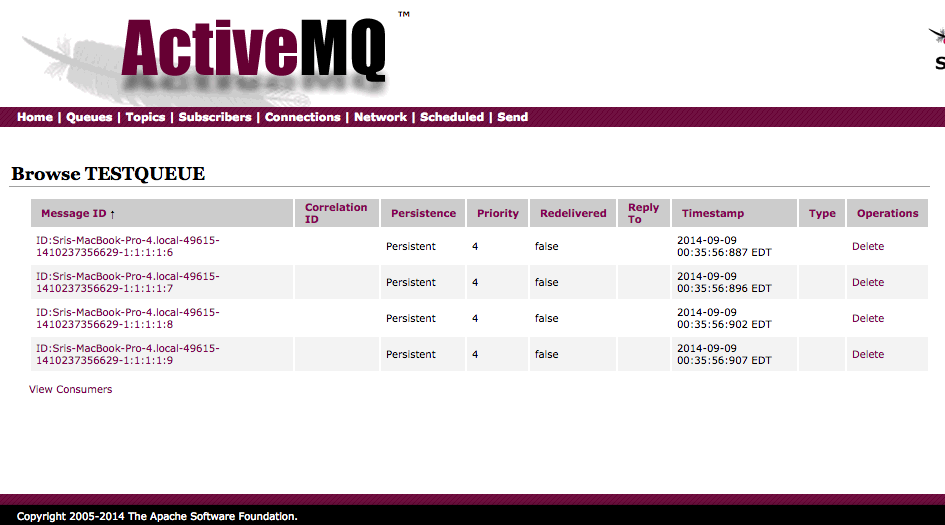

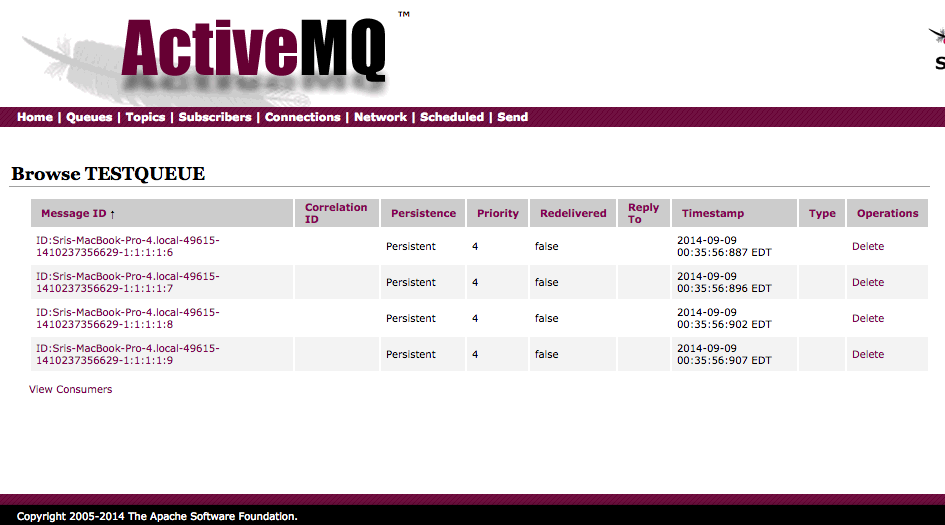

In ActiveMQ 5.10 web console you can view and delete the pending messages in a Queue

Overview

ActiveMQ cluster environment includes following

1 Three VM instances with CentOS os AND JDK 8

2 Three ActiveMQ instances.

3 Three Zookeeper instances.

Pre-install

Following ports are required to open in Iptables host firewall.

- Zookeeper

- 2181 – the port that clients will use to connect to the ZK ensemble

- 2888 – port used by ZK for quorum election

- 3888 – port used by ZK for leader election

- ActiveMQ

- 61616 – default Openwire port

- 8161 – Jetty port for web console

- 61619 – LevelDB peer-to-peer replication port for ActiveMQ slaves.

- To check Iptables status.

- service iptables status

- To stop iptables service

- service iptables save

- service iptables stop

- chkconfig iptables off

- To start again

- service iptables start

- chkconfig iptables on

- Setup proper hostname, edit following files

- Update HOSTNAME value in /etc/sysconfig/network : E.g: HOSTNAME=msgq1.dev.int

- Add host name with IP address of the machine in /etc/hosts: E.g: 192.168.163.160 msgq1.dev.int msgqa1

- Restart the instance and repeat same process each instances.

Installation

Java JDK

- Download JDK 8 from http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

- Extract JdK-8*.tar.gz to a folder of your choice (I used ~/Dev/server). This will create folder ~/Dev/server/jdk1.8.0_20.

- Set up JAVA_HOME directory.

- To setup for all users edit /etc/profile and add following line export JAVA_HOME=HOME_DIR/Dev/server/jdk1.8.0_20.

Zookeeper

- Download zookeeper from site http://zookeeper.apache.org/

- Extract the file into your folder of your choice. I have used ~/Dev/server/

- Create soft link zookeeper for extracted directory.

- For reliable ZooKeeper service, the ZK should be deployed in a cluster mode knows as ensemble. As long as a majority of the ensemble are up, the service will be available.

- Goto conf directory and create zookeeper configuration directory.

- cd

/conf - Copy zoo_sample.cfg to zoo.cfg

- Make sure the file has following lines

- tickTime=2000initLimit=5

syncLimit=2

dataDir=~/Dev/server/data/zk

clientPort=2181

- tickTime=2000initLimit=5

- Add following lines into zoo.cfg at the end.

- server.1=zk1_IPADDRESS:2888:3888

server.2=zk2_IPADDRESS:2888:3888

server.3=zk3_IPADDRESS:2888:3888

- server.1=zk1_IPADDRESS:2888:3888

- zk1, zk2 & zk3 are IP addresses for the ZK servers.

- Port 2181 is used to communicate with client

- Port 2888 is used by peer ZK servers to communicate among themselves (Quorum port)

- Port 3888 is used for leader election (Leader election port).

- The last three lines of the server.id=host:port:port format specifies that there are three nodes in the ensemble. In an ensemble, each ZooKeeper node must have a unique ID between 1 and 255. This ID is defined by creating a file named myid in the dataDir directory of each node. For example, the node with the ID 1 (server.1=zk1:2888:3888) will have a myid file at /home/sthuraisamy/Dev/server/data/zk with the text 1 inside it.

- Create myid file in data directory for zk1 ( server.1) and for other ZK servers as 2 & 3.

- echo 1 > myid

Download activemq distribution from http://apache.mirror.nexicom.net/activemq/5.10.0/apache-activemq-5.10.0-bin.tar.gz

- Extract the file into your folder of your choice. I have used ~/Dev/server/

- Create soft link activemq for extracted directory.

- Do following

- cd

/bin

- cd

- chmod 755 activemq

/bin/activemq start

- To confirm the activemq is listening on port 61616 or check the log file and confirm port listening messages are populated.

- netstat -an|grep 61616

- In activemq config file, following bean classes define the settings

- PropertyPlaceholderConfigurer

- Credentials

- Broker section

- constantPendingMessageLimitStrategy: limit the number of messages to be keep in memory for slow consumers.

- Other settings to handle slower consumers, refer http://activemq.apache.org/slow-consumer-handling.html

- Persistence adapter to define the storage to keep the messages.

- For better performance

- Use NIO : Refer http://activemq.apache.org/configuring-transports.html#ConfiguringTransports-TheNIOTransport

- Replicated LevelDB store using Zookeeper http://activemq.apache.org/replicated-leveldb-store.html

- The settings need to be done in ActiveMQ after zookeeper is setup. Add following lines into conf/activemq.xml

- hostname should be assigned with separate IP address for each instance.

My approach was to get the software setup on a single VM instance in VM Ware fusion, and create two more clones to have three servers. I have named the instances as messageq1, messageq2, and messageq3. After starting instances confirm the myid file and IP address in the zoo.cfg are setup properly with new instance’s ip address.

After configured everything

- Start the Zookeeper instances in all three nodes :

/bin/zk_Server.sh start - Start the activeMQ instances in all three nodes :

/bin/activemq start - In my setup when I start the first node I didn’t find any issue. After I have started the second node, I found exception in the log file.

No IOExceptionHandler registered, ignoring IO exception | org.apache.activemq.broker.BrokerService | LevelDB IOExcepti

on handler.

java.io.IOException: com.google.common.base.Objects.firstNonNull(Ljava/lang/Object;Ljava/lang/Object

at org.apache.activemq.util.IOExceptionSupport.create(IOExceptionSupport.java:39)[activemq-client-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBClient.might_fail(LevelDBClient.scala:552)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBClient.replay_init(LevelDBClient.scala:657)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBClient.start(LevelDBClient.scala:558)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.DBManager.start(DBManager.scala:648)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.LevelDBStore.doStart(LevelDBStore.scala:235)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.replicated.MasterLevelDBStore.doStart(MasterLevelDBStore.scala:110)[activemq-leveldb-store-5.10.0.jar:5.10.0]

at org.apache.activemq.util.ServiceSupport.start(ServiceSupport.java:55)[activemq-client-5.10.0.jar:5.10.0]

at org.apache.activemq.leveldb.replicated.ElectingLevelDBStore$$anonfun$start_master$1.apply$mcV$sp(ElectingLevelDBStore.scala:226)[activemq-lev

eldb-store-5.10.0.jar:5.10.0]

at org.fusesource.hawtdispatch.package$$anon$4.run(hawtdispatch.scala:330)[hawtdispatch-scala-2.11-1.21.jar:1.21]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)[:1.8.0_20]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)[:1.8.0_20]

After going through tickets in activeMQ found following ticket has been reported https://issues.apache.org/jira/browse/AMQ-5225. Workaround described in the ticket will solve the issue. The work around for this issue,

- remove pax-url-aether-1.5.2.jar from lib directory

- comment out the log query section

To confirm ActiveMQ listening for request

◦ In master, check with netstat -an | grep 61616 and confirm the port is in listen mode.

◦ In slaves, you can run netstat -an | grep 6161 and output should show you slave binding port 61619

Post-Install

◦ For Zookeeper, set the Java heap size. This is very important to avoid swapping, which will seriously degrade ZooKeeper performance. To determine the correct value, use load tests, and make sure you are well below the usage limit that would cause you to swap. Be conservative - use a maximum heap size of 3GB for a 4GB machine.

◦ Increase the open file number to support 51200. E.g: limit -n 51200.

◦ Review linux network setting parameters : http://www.nateware.com/linux-network-tuning-for-2013.html#.VA8pN2TCMxo

◦ Review ActiveMQ transports configuration settings : http://activemq.apache.org/configuring-transports.html

◦ Review ActiveMQ persistence configuration settings : http://activemq.apache.org/persistence.html

◦ Review zookeeper configuration settings : http://zookeeper.apache.org/doc/trunk/zookeeperAdmin.html#sc_configuration

Reference

◦ tickTime: the length of a single tick, which is the basic time unit used by ZooKeeper, as measured in milliseconds. It is used to regulate heartbeats, and timeouts. For example, the minimum session timeout will be two ticks.

◦ initLimit: Amount of time, in ticks , to allow followers to connect and sync to a leader. Increased this value as needed, if the amount of data managed by ZooKeeper is large.

◦ syncLimit: Amount of time, in ticks , to allow followers to sync with ZooKeeper. If followers fall too far behind a leader, they will be dropped.

◦ clientPort: The port to listen for client connections; that is, the port that clients attempt to connect to.

◦ dataDir: The location where ZooKeeper will store the in-memory database snapshots and, unless specified otherwise, the transaction log of updates to the database.

ActiveMQ configuration file from msgq1

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://activemq.apache.org/schema/core http://activemq.apache.org/schema/core/activemq-core.xsd">

directory=“~/Dev/server/activemq/data/leveldb"

hostname="192.168.163.160"/>

ZK Configuration file

tickTime=2000

initLimit=5

syncLimit=2

dataDir=/home/sthuraisamy/Dev/server/data/zk

clientPort=2181

server.1=192.168.163.160:2888:3888

server.2=192.168.163.161:2888:3888

server.3=192.168.163.162:2888:3888

In ActiveMQ 5.10 web console you can view and delete the pending messages in a Queue

Streaming Data

21/08/14 00:49 Filed in: Data pipeline

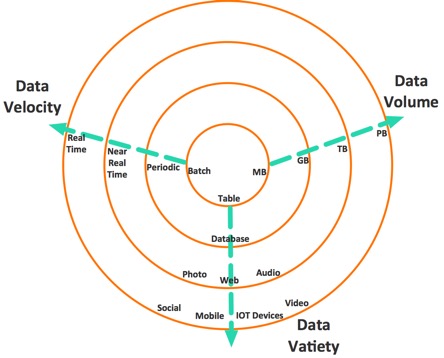

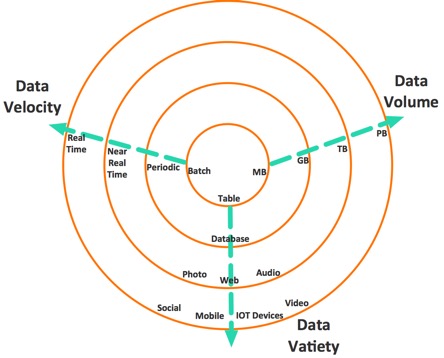

BigData is a collection of data set so large and complex that it becomes difficult to process using on-hand database management tools or traditional applications. The datasets not only contain structured datasets, but also include unstructured datasets. Big data has three characteristics:

The diagram explains the characteristics.

Recently, more and more is getting easily available as streams. The stream data item can be classified into 5Ws data dimensions.

Conventional data processing technologies are now unable to process these kind of volume data within a tolerable elapsed time. In-memory databases also have certain key problems such as larger data size may not fit into memory, moving all data sets into centralized machine is too expensive. To process data as they arrive, the paradigm has changed from the conventional “one-shot” data processing approach to elastic and virtualized datacenter cloud-based data processing frameworks that can mine continuos, high-volume, open-ended data streams.

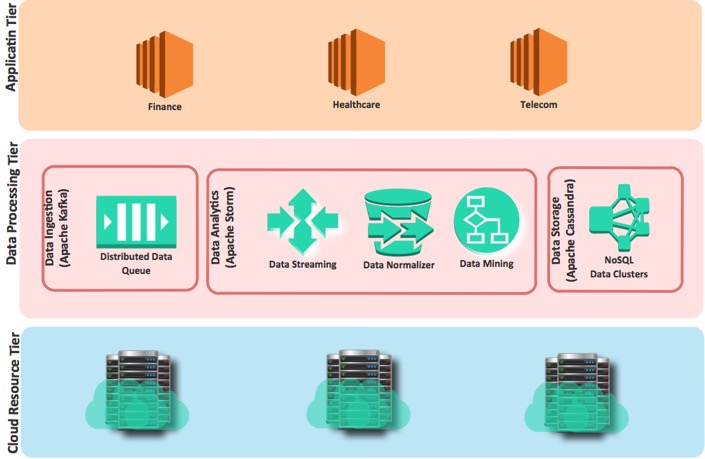

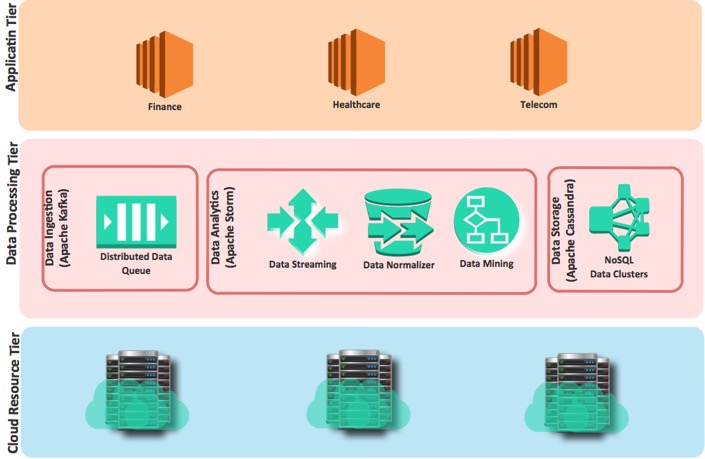

The framework contains three main components.

Following diagram explain these components.

- Volume

- Velocity.

- Variety.

The diagram explains the characteristics.

Recently, more and more is getting easily available as streams. The stream data item can be classified into 5Ws data dimensions.

- What the data is? Video, Image, Text, Number

- Where the data came from? From twitter, Smart phone, Hacker

- When the data occurred? The timestamp of data incidence.

- Who received the data? Friend, Bank account, victim

- Why the data occurred? Sharing photos, Finding new friends, Spreading a virus

- How the data was transferred? By Internet, By email, Online transferred

Conventional data processing technologies are now unable to process these kind of volume data within a tolerable elapsed time. In-memory databases also have certain key problems such as larger data size may not fit into memory, moving all data sets into centralized machine is too expensive. To process data as they arrive, the paradigm has changed from the conventional “one-shot” data processing approach to elastic and virtualized datacenter cloud-based data processing frameworks that can mine continuos, high-volume, open-ended data streams.

The framework contains three main components.

- Data Ingestion: Accepts the data from multiple sources such as social networks, online services, etc.

- Data Analytics: Consist many systems to read, analyze, clean and normalize.

- Data Storage: Provide to store and index data sets.

Following diagram explain these components.

PCI DSS 3.0

31/07/14 15:12 Filed in: Security

The Payment Card Industry Data Security Standard (PCI DSS) was developed by following payment entities.

PCI DSS mandates set of requirements and processes for security management, policies, procedures, network architecture, software design and critical protective measures. The requirement must be met by all organizations (merchants and service providers) that transmit, process or store payment card data, or directly or indirectly affect the security of cardholder data. If an organization uses a third party to manage cardholder data, the organization has a responsibility to ensure that this third party is compliant with the PCI DSS. The requirements are published and controlled by the independent PCI Security Standards Council (SSC) defines qualifications for Qualified Security Assessors (QSAs), Internal Security Assessors (ISA), PCI Forensic Investigators (PFI), PCI Professionals (PCIP), Qualified Integrators and Resellers (QIR), and Approved Scanning Vendors (ASVs). It trains,

Key definitions2 and acronyms in the PCI DSS:

PCI DSS applies to all processes, people and technology, and all system components, including network components, servers, or applications that are included in or connected to the cardholder data environment. It also applies to telephone recording technology used by call centres that accept payment card transactions.

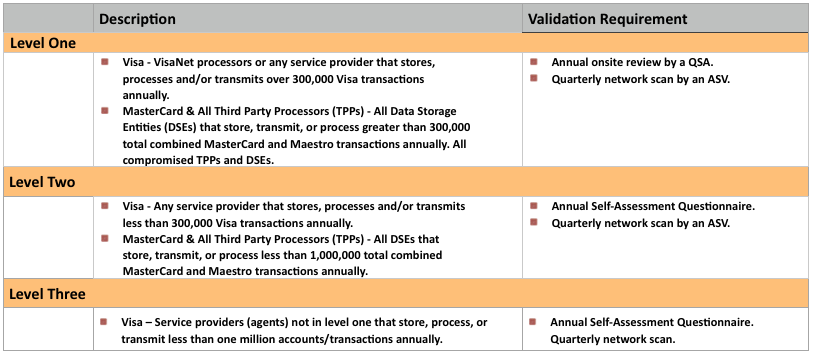

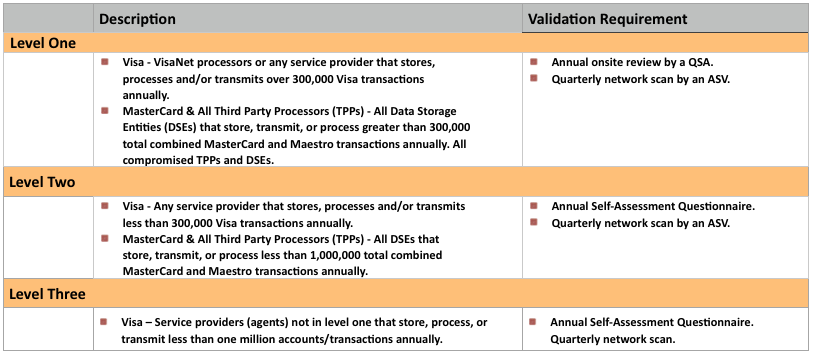

Service provider compliance levels are described in below table:

PCI DSS Procedures

Install and maintain a firewall configuration to protect cardholder data

Do not use vendor-supplied defaults for system passwords and other security parameters

Protect stored cardholder data

Encrypt transmission of cardholder data across open, public networks

Protect all systems against malware and regularly update anti-virus software or programs

Develop and maintain secure systems and applications

Restrict access to cardholder data by business need-to-know

Identify and authenticate access to system components

Restrict physical access to cardholder data

Track and monitor all access to network resources and cardholder data

Regularly test security systems and processes

Maintain a policy that addresses information security for all personnel

- American Express

- Discover Financial Services

- JCB International

- MasterCard Worldwide

- Visa.

PCI DSS mandates set of requirements and processes for security management, policies, procedures, network architecture, software design and critical protective measures. The requirement must be met by all organizations (merchants and service providers) that transmit, process or store payment card data, or directly or indirectly affect the security of cardholder data. If an organization uses a third party to manage cardholder data, the organization has a responsibility to ensure that this third party is compliant with the PCI DSS. The requirements are published and controlled by the independent PCI Security Standards Council (SSC) defines qualifications for Qualified Security Assessors (QSAs), Internal Security Assessors (ISA), PCI Forensic Investigators (PFI), PCI Professionals (PCIP), Qualified Integrators and Resellers (QIR), and Approved Scanning Vendors (ASVs). It trains,

Key definitions2 and acronyms in the PCI DSS:

- Acquirer: Bank, which acquires merchants.

- Payment brand: Visa, MasterCard, Amex, Discover, JCB.

- Merchant: Sells products to cardholders.

- Service provider: A business entity, directly or indirectly involved in the processing, storage, transmission and switching of cardholder data.

- PAN (Primary Account Number): The 16 digit payment card number.

- TPPs (Third Party Processors): Who process payment card transactions.

- DSEs (Data Storage Entities): Who store or transmit payment card data.

- QSA (Qualified Security Assessor): Someone who is trained and certified to carry out PCI DSS compliance assessments.

- ISA (Internal Security Assessor): Someone who is trained and certified to conduct internal security assessments.

- ASV (Approved Scanning Vendor): An organization that is approved as competent to carry out the security scans required by PCI DSS.

- PFI (PCI Forensic Investigator): An individual trained and certified to investigate and contain information security breaches involving cardholder data.

- CDE (Cardholder Data Environment): Any network that possesses cardholder data or sensitive authentication data.

PCI DSS applies to all processes, people and technology, and all system components, including network components, servers, or applications that are included in or connected to the cardholder data environment. It also applies to telephone recording technology used by call centres that accept payment card transactions.

Service provider compliance levels are described in below table:

PCI DSS Procedures

Install and maintain a firewall configuration to protect cardholder data